AutoMQ SSL Security Protocol Configuration Tutorial

AutoMQ[1] is a cloud-native stream processing system that maintains 100% compatibility with Apache Kafka[2]. By decoupling storage and moving it to object storage, AutoMQ significantly improves cost efficiency and elasticity. Specifically, AutoMQ offloads storage to shared cloud storage (EBS and S3) through S3Stream, a stream storage library built on S3, which provides low-cost, low-latency, highly available, highly durable, and effectively unlimited stream storage. Compared with the traditional Shared Nothing architecture, AutoMQ's Shared Storage architecture significantly reduces storage and operational complexity while improving elasticity and reliability.

Because AutoMQ is 100% compatible with Kafka, security authentication is configured the same way for both. This article shows how to start AutoMQ securely using SSL.

The first part of this article configures SSL for AutoMQ with self-signed certificates. If you are not familiar with the SSL protocol itself, the following concepts are worth understanding before you proceed.

- Key Pair: Typically consists of a public key and a private key. The public key can be openly distributed and is used to encrypt data or verify digital signatures, while the private key must be securely stored and is used to decrypt data encrypted by the public key or to create digital signatures.

- Digital Signature: The product of encrypting a message digest (usually a hash value) with a private key.

- Certificate Signing Request (CSR): Used to generate a digital signature certificate. The CSR includes the applicant's public key and identity information (organization name, domain name, etc.).

- Trusted Signature Certificate: The product of a CSR being digitally signed by a publicly trusted institution using their private key.

- Self-Signed Certificate: The product of a CSR being digitally signed using the private key, where the public key included in the CSR and the private key used for signing belong to the same institution or individual.

- Truststore and Keystore: In this article, both correspond to the .jks files we generate. They can refer to the same file, but in actual development, it is recommended to configure the truststore and keystore separately.
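To make the key pair and digital signature concepts concrete, here is a minimal sketch using the OpenSSL command line (assumed to be installed). The file names demo.key, demo.pub, message.txt, and message.sig are hypothetical, and everything is written to a throwaway directory. The sketch signs the SHA-256 digest of a message with the private key and then verifies it with the public key.

```shell
# Work in a throwaway directory so no real key material is touched.
demo_dir=$(mktemp -d)

# 1. Generate a 2048-bit RSA private key and derive the matching public key.
openssl genrsa -out "${demo_dir}/demo.key" 2048 2>/dev/null
openssl rsa -in "${demo_dir}/demo.key" -pubout -out "${demo_dir}/demo.pub" 2>/dev/null

# 2. Sign the SHA-256 digest of a message with the private key.
printf 'hello automq\n' > "${demo_dir}/message.txt"
openssl dgst -sha256 -sign "${demo_dir}/demo.key" \
  -out "${demo_dir}/message.sig" "${demo_dir}/message.txt"

# 3. Anyone holding the public key can verify the signature.
verify_result=$(openssl dgst -sha256 -verify "${demo_dir}/demo.pub" \
  -signature "${demo_dir}/message.sig" "${demo_dir}/message.txt")
echo "$verify_result"

# 4. After the message changes, the same signature no longer verifies.
printf 'tampered\n' > "${demo_dir}/message.txt"
tamper_result=$(openssl dgst -sha256 -verify "${demo_dir}/demo.pub" \
  -signature "${demo_dir}/message.sig" "${demo_dir}/message.txt" 2>&1)
echo "$tamper_result"
```

The first check should report a successful verification and the second a verification failure, which is the property that certificates rely on: a signature made with the private key can be checked by anyone, but cannot be transferred to different content.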

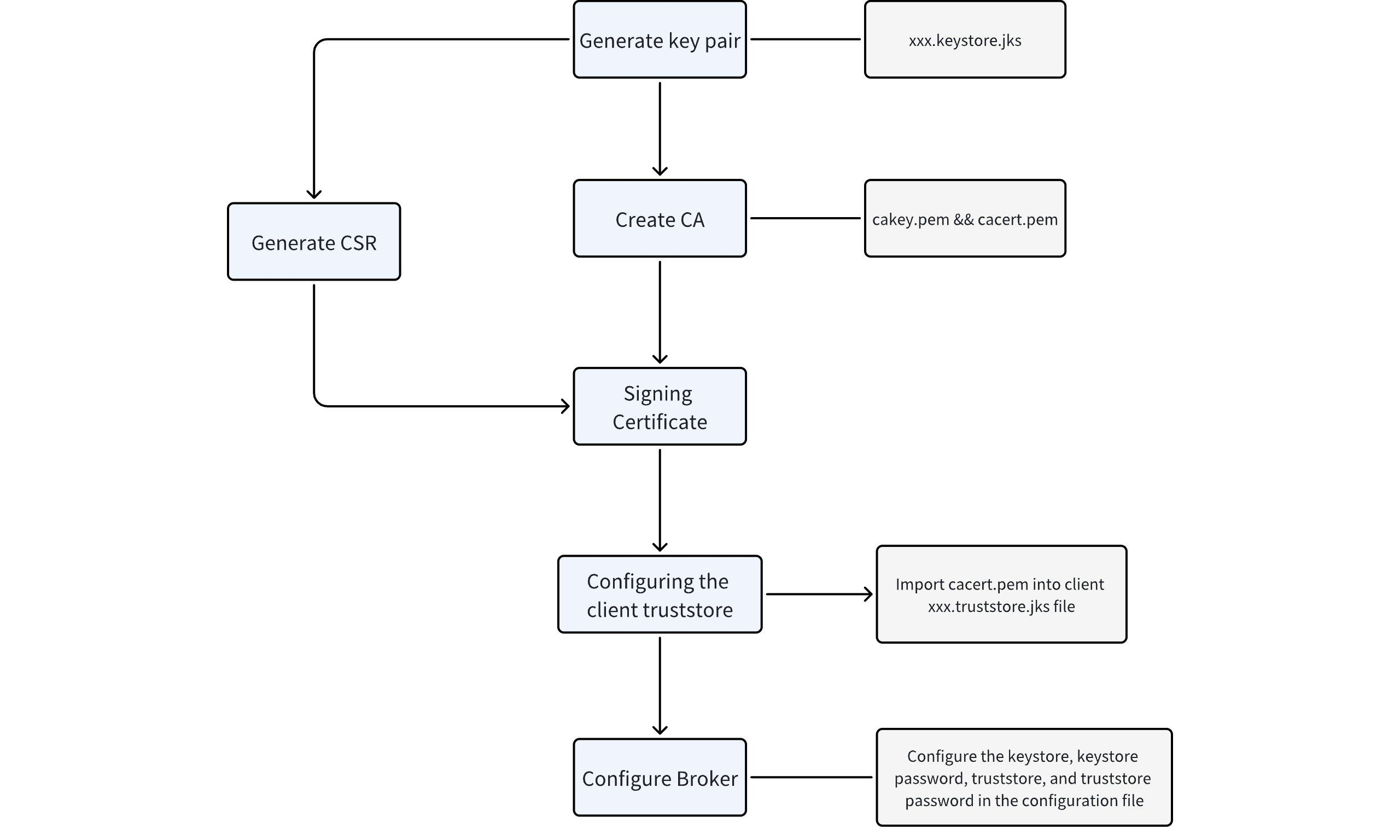

The first step in deploying one or more SSL-supported Brokers is to generate a pair of public and private keys for each server. We will use Java's keytool command to generate key pairs for the Brokers you need to configure.

keytool -keystore {keystorefile} -alias {localhost} -validity {validity} -genkey -keyalg RSA [-ext SAN=DNS:{FQDN},IP:{IPADDRESS1}]

Configuration Parameter Meanings:

- keystore: Specifies the location and name of the keystore file.

- alias: Assigns an alias to the key entry to uniquely identify this key within the keystore.

- validity: Sets the number of days the self-signed certificate will be valid.

- genkey: Indicates that a new key pair will be generated.

- keyalg: Specifies the algorithm used by the key pair.

- ext: Adds X.509 extensions to the certificate, such as the Subject Alternative Name (SAN).

To add hostname information in the certificate (for later hostname verification), use the extension parameter -ext SAN=DNS:{FQDN},IP:{IPADDRESS1}.

The example used in this article:

keytool -keystore ssl.keystore.jks -alias localhost -validity 365 -genkey -keyalg RSA

Running this command creates an RSA key pair and stores it in a file named ssl.keystore.jks, the keystore that the later steps of this article use. The key pair's alias is localhost, and the self-signed certificate generated with it is valid for 365 days. If the file does not exist, it is created at the specified path, and you are prompted to set a password and enter identity information.
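For scripted setups, the following is a minimal non-interactive sketch of the same keytool step with the SAN extension filled in. The FQDN broker1.example.com, the IP 192.0.2.10, the password, and the keystore name demo.keystore.jks are all hypothetical placeholders, and the keystore is written to a throwaway directory. The -dname, -storepass, and -keypass options answer the prompts up front.

```shell
# Hypothetical host identity; replace with your broker's real FQDN and IP.
FQDN="broker1.example.com"
IP_ADDR="192.0.2.10"
STORE_PASS="demo-password"   # demo only, never reuse in production
SAN_EXT="SAN=DNS:${FQDN},IP:${IP_ADDR}"

demo_dir=$(mktemp -d)
KEYSTORE="${demo_dir}/demo.keystore.jks"

if command -v keytool >/dev/null 2>&1; then
  # Generate the key pair without interactive prompts.
  keytool -genkeypair -keystore "$KEYSTORE" -alias localhost \
    -validity 365 -keyalg RSA -keysize 2048 \
    -dname "CN=${FQDN}" \
    -storepass "$STORE_PASS" -keypass "$STORE_PASS" \
    -ext "$SAN_EXT"

  # Show the SAN entries that were written into the certificate.
  keytool -list -v -keystore "$KEYSTORE" -alias localhost \
    -storepass "$STORE_PASS" | grep -A 3 "SubjectAlternativeName"
else
  echo "keytool not found; would have used -ext ${SAN_EXT}"
fi
```

Passing passwords on the command line exposes them in the shell history and process list, so this form is better suited to test environments than to production hosts.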

When "hostname verification" is enabled, the certificate provided by the connected server is checked against the server's actual hostname or IP address to ensure that the connection is made to the correct server.

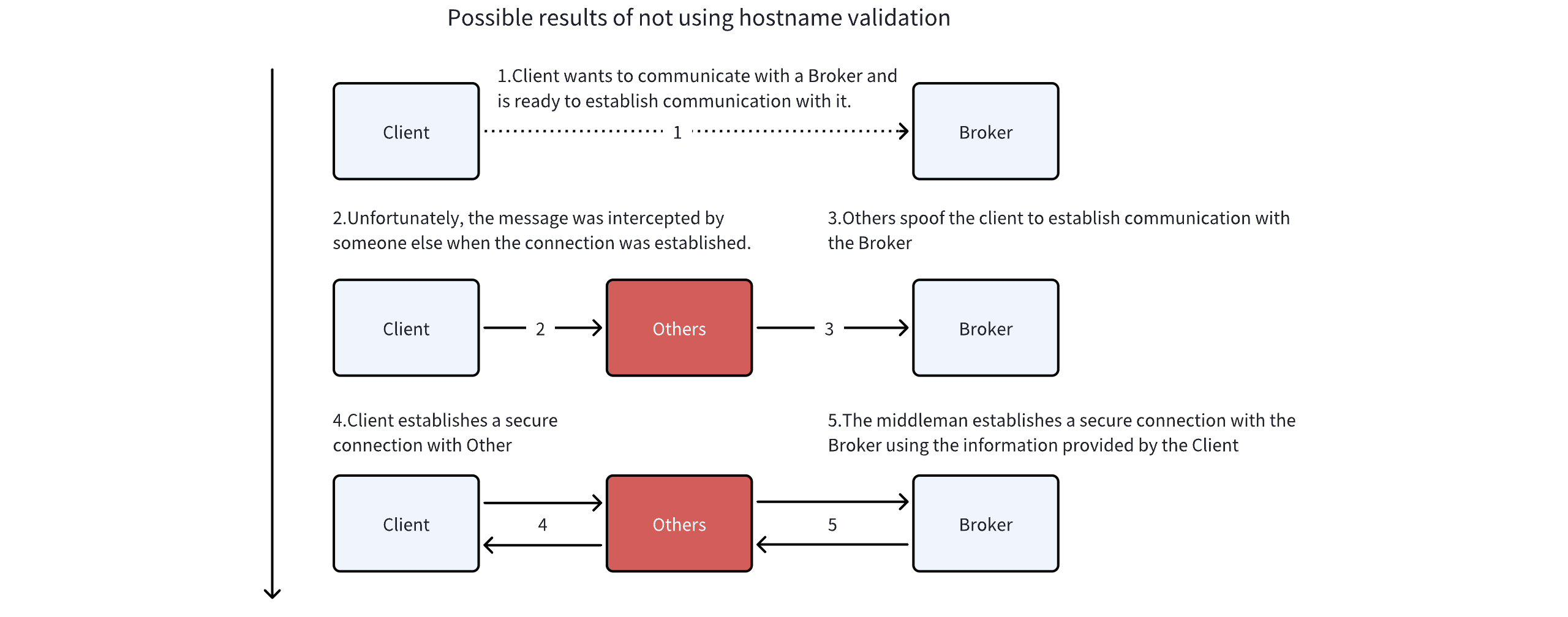

The primary purpose of this check is to prevent man-in-the-middle attacks (see the accompanying figure). Starting with Kafka 2.0.0, hostname verification is enabled by default for client connections and inter-broker connections when SSL is used.

If the client has enabled hostname verification, it will validate the server's Fully Qualified Domain Name (FQDN) or IP address against one of the following two fields.

- Common Name (CN)

- Subject Alternative Name (SAN)

Although Kafka checks both fields, using the Common Name (CN) field for hostname verification has been deprecated since 2000, when RFC 2818 was published, and should be avoided whenever possible. The SAN field is also more flexible, because it allows multiple DNS names and IP addresses to be declared within a single certificate. The previous subsection showed how to declare them with the -ext parameter.
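To see what a client compares against, the sketch below builds a throwaway self-signed certificate with a SAN list and asks OpenSSL whether given names are covered by it. This only approximates the check for inspection purposes; Kafka's Java clients perform their own verification internally. The host names and the IP are hypothetical, and the -addext option assumes OpenSSL 1.1.1 or later.

```shell
demo_dir=$(mktemp -d)
CERT="${demo_dir}/demo-cert.pem"

# Throwaway self-signed certificate with a SAN list (needs OpenSSL 1.1.1+ for -addext).
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -keyout "${demo_dir}/demo-key.pem" -out "$CERT" \
  -subj "/CN=demo" \
  -addext "subjectAltName=DNS:broker1.example.com,IP:192.0.2.10" 2>/dev/null

# Print the SAN entries stored in the certificate.
openssl x509 -in "$CERT" -noout -text | grep -A 1 "Subject Alternative Name"

# Ask OpenSSL whether a given host name or IP is covered by the certificate.
match_result=$(openssl x509 -in "$CERT" -noout -checkhost broker1.example.com)
mismatch_result=$(openssl x509 -in "$CERT" -noout -checkhost other.example.com)
ip_result=$(openssl x509 -in "$CERT" -noout -checkip 192.0.2.10)
echo "$match_result"
echo "$mismatch_result"
echo "$ip_result"
```

The same -checkhost and -checkip calls can be pointed at a broker's real certificate to confirm that the address clients use appears in its SAN list before hostname verification is switched on.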

Configuring hostname verification is one of the best practices for securing communication in Kafka. However, if you only want to start a Kafka service with SSL quickly, you can set the ssl.endpoint.identification.algorithm property in the configuration file to an empty string to disable hostname verification. This article disables hostname verification so that readers can quickly start a broker with SSL enabled, and also provides the commands for configuring hostname verification for reference.

After completing the above steps, you need to configure the Kafka server with the SSL protocol. At this stage, each server should already have a key pair, which is the foundation for creating certificates. To enhance authentication features, it is common to submit a signing request to a publicly trusted entity for digital signing. This publicly trusted entity is typically referred to as a Certificate Authority (CA).

A Certificate Authority is responsible for signing certificates. A CA is akin to a government that issues passports (trusted authority). The government stamps (digitally signs) each passport, making it difficult to forge. Others will verify the stamp to ensure the passport's authenticity. Cryptography ensures that certificates signed by the CA are computationally infeasible to forge. Therefore, as long as the CA is a genuine and trustworthy entity, people can be assured they are connecting to the server they intend to connect to.

In the first part of this tutorial, we will complete the configuration using a self-created CA. In enterprise environments, when setting up production clusters, certificates are typically signed by a trusted internal enterprise CA. How to configure certificates signed by others will be explained in the second part of this article.

Next, we will use OpenSSL to generate the CA and sign certificates. This cryptographic library includes major cryptographic algorithms and common key and certificate management functions. Please install it according to your machine type; specific installation steps are not elaborated here.

Now, you should prepare the initial files as follows (you can skip this configuration if hostname verification is not required):

ca/

├── openssl.cnf

├── serial.txt

├── index.txt

Below is the openssl.cnf configuration file used in this example of OpenSSL. You can adjust its parameters based on your needs. The serial.txt and index.txt files are used to track which certificates are issued by the CA. If not needed, you can comment out this configuration in the following configuration file.

Due to a bug in OpenSSL, the x509 module does not copy the requested extension fields from the certificate request to the final certificate. Creating a CA requires specifying the config to ensure that the extension fields are copied to the final certificate.

HOME = .

RANDFILE = $ENV::HOME/.rnd

####################################################################

[ ca ]

default_ca = CA_default # The default ca section

[ CA_default ]

base_dir = .

certificate = $base_dir/cacert.pem # The CA certificate

private_key = $base_dir/cakey.pem # The CA private key

new_certs_dir = $base_dir # Location for new certs after signing

database = $base_dir/index.txt # Database index file

serial = $base_dir/serial.txt # The current serial number

default_days = 1000 # How long to certify for

default_crl_days = 30 # How long before next CRL

default_md = sha256 # Use public key default MD

preserve = no # Keep passed DN ordering

x509_extensions = ca_extensions # The extensions to add to the cert

email_in_dn = no # Don't concat the email in the DN

copy_extensions = copy # Required to copy SANs from CSR to cert

####################################################################

[ req ]

default_bits = 4096

default_keyfile = cakey.pem

distinguished_name = ca_distinguished_name

x509_extensions = ca_extensions

string_mask = utf8only

####################################################################

[ ca_distinguished_name ]

countryName = Country Name (2 letter code)

countryName_default = DE

stateOrProvinceName = State or Province Name (full name)

stateOrProvinceName_default = Test Province

localityName = Locality Name (eg, city)

localityName_default = Test Town

organizationName = Organization Name (eg, company)

organizationName_default = Test Company

organizationalUnitName = Organizational Unit (eg, division)

organizationalUnitName_default = Test Unit

commonName = Common Name (e.g. server FQDN or YOUR name)

commonName_default = Test Name

emailAddress = Email Address

emailAddress_default = test@test.com

####################################################################

[ ca_extensions ]

subjectKeyIdentifier = hash

authorityKeyIdentifier = keyid:always, issuer

basicConstraints = critical, CA:true

keyUsage = keyCertSign, cRLSign

####################################################################

[ signing_policy ]

countryName = optional

stateOrProvinceName = optional

localityName = optional

organizationName = optional

organizationalUnitName = optional

commonName = supplied

emailAddress = optional

####################################################################

[ signing_req ]

subjectKeyIdentifier = hash

authorityKeyIdentifier = keyid,issuer

basicConstraints = CA:FALSE

keyUsage = digitalSignature, keyEncipherment
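The database and serial settings in this configuration point at index.txt and serial.txt, which must exist before openssl ca can issue a certificate. A minimal sketch for creating them follows; the CA_DIR variable is a hypothetical convenience that defaults to the ca directory shown earlier, and existing files are left untouched.

```shell
# Directory that holds openssl.cnf, index.txt and serial.txt (base_dir = .).
CA_DIR="${CA_DIR:-ca}"
mkdir -p "$CA_DIR"

# index.txt starts empty; openssl ca appends one line per issued certificate.
[ -f "${CA_DIR}/index.txt" ] || : > "${CA_DIR}/index.txt"

# serial.txt holds the next serial number in hex, with an even number of digits.
[ -f "${CA_DIR}/serial.txt" ] || echo 01 > "${CA_DIR}/serial.txt"

ls -l "$CA_DIR"
```

The openssl.cnf file itself still has to be placed in the same directory, and the openssl commands that reference it should be run from there because base_dir is relative.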

Two commands to generate a CA are as follows:

# When host authentication is required

openssl req -x509 -config openssl.cnf -nodes -keyout cakey.pem -out cacert.pem -days 365

# No host authentication required

openssl req -new -x509 -nodes -keyout cakey.pem -out cacert.pem -days 365

The meaning of each parameter:

- openssl req: Runs OpenSSL's certificate request and certificate generation utility.

- x509: Outputs a self-signed certificate instead of a certificate request. X.509 is the standard format for public key certificates in cryptography.

- config: Specifies the path and name of the OpenSSL configuration file. If not specified, the default configuration file is used.

- nodes: Leaves the generated private key unencrypted, so no password is required when it is used.

- keyout: Specifies the path and name for the generated private key.

- out: Specifies the path and name for the generated certificate file.

After executing the command, a private key file cakey.pem and an X.509 public key certificate cacert.pem will be generated.

The private key in cakey.pem will be used to sign certificates, while cacert.pem needs to be configured in the client's trust store so that the client can use the public key in this certificate to verify whether the server's certificate is signed by this CA. This key pair should be kept very secure; if someone gains access to it, they could create and sign certificates trusted by your infrastructure, which means they could impersonate anyone when connecting to any service that trusts this CA.

Next, we need to add the generated CA public key certificate cacert.pem to the client's trust store so that the client can trust this CA:

keytool -keystore client.truststore.jks -alias CARoot -import -file cacert.pem

# This truststore operation is client-side, where we put our generated CA certificate into the client's trust chain.

New Parameter Descriptions:

- import: Indicates that a certificate is to be imported.

- file: Specifies the path and name of the certificate file to be imported.

This command means that the certificate cacert.pem will be imported into the client's trust store client.truststore.jks and identified with the alias CARoot.

Note: If you set ssl.client.auth to "requested" or "required" in your Kafka Brokers configuration to demand client authentication, you must also provide a truststore for Kafka Brokers. This truststore should include the CA certificate that signs the client's certificate. Here, our client's certificate is signed by our self-generated CA, so we can directly import our CA certificate into the server.

keytool -keystore ssl.truststore.jks -alias CARoot -import -file cacert.pem

# This truststore operation is conducted on the server side, importing the CA certificate that signs the client's certificate into the server's trust chain. If the ssl.client.auth attribute is not set, this operation is unnecessary.

When ssl.client.auth is also set, we establish SSL mutual authentication. This means that when the client and server connect, not only does the client need to validate the server's certificate, but the server must also validate the client's certificate. Secure communication channels can only be established for data transfer once both parties have successfully authenticated each other. This tutorial uses one-way authentication deployment, meaning the server will not validate the client's certificate.

Now, we want to configure the server with a CA-signed certificate. First, we need to create a Certificate Signing Request (CSR) using the key pair generated for the server in the first step. We'll continue using the keytool utility for this.

keytool -keystore ssl.keystore.jks -alias localhost -certreq -file cert-file

# cert-file is the CSR file we generated

Then, use the CA we created to sign the certificate (with or without host verification):

# When host authentication is not required.

openssl x509 -req -CA cacert.pem -CAkey cakey.pem -in cert-file -out cert-signed -days 3650 -CAcreateserial -passin pass:123456

Parameter explanation:

- x509: An OpenSSL sub-command used for handling X.509 certificates.

- req: Indicates that the input is a certificate signing request rather than a certificate.

- CA: Specifies the CA certificate used to sign the request.

- CAkey: Specifies the CA private key used to sign the request.

- in: Specifies the certificate request file to be signed.

- out: Specifies the output file for the signed certificate.

- CAcreateserial: Creates the CA serial number file if it does not already exist; serial numbers identify the certificates the CA has signed.

- passin pass:123456: Supplies the password that unlocks the CA private key, here "123456". It is only needed when the private key is encrypted.

# When host authentication is required.

openssl ca -config openssl.cnf -policy signing_policy -extensions signing_req -out cert-signed -in cert-file

Parameter explanation:

- openssl ca: Calls OpenSSL's CA functionality to sign Certificate Signing Requests (CSR) and generate certificates.

- config: Specifies the path and name of the configuration file.

- policy: Specifies the policy section used for issuing certificates.

- extensions: Specifies the configuration section whose extensions are added to the certificate.

After executing the command, a server certificate will be generated and saved as cert-signed.
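Before importing anything into a keystore, it can help to confirm that a signed certificate really chains back to the CA. The sketch below runs the whole flow end to end with throwaway files. As an assumption made to keep it free of interactive prompts, it creates the CSR with openssl req instead of keytool -certreq and passes all identity fields through -subj; the file names mirror those used in this article.

```shell
demo_dir=$(mktemp -d)

# 1. Throwaway CA (non-interactive thanks to -subj).
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -keyout "${demo_dir}/cakey.pem" -out "${demo_dir}/cacert.pem" \
  -subj "/CN=Demo CA" 2>/dev/null

# 2. Server key and CSR (the article creates the CSR with keytool -certreq).
openssl req -new -newkey rsa:2048 -nodes \
  -keyout "${demo_dir}/server-key.pem" -out "${demo_dir}/cert-file" \
  -subj "/CN=localhost" 2>/dev/null

# 3. Sign the CSR with the CA, as in the command without hostname verification.
openssl x509 -req -CA "${demo_dir}/cacert.pem" -CAkey "${demo_dir}/cakey.pem" \
  -in "${demo_dir}/cert-file" -out "${demo_dir}/cert-signed" \
  -days 1 -CAcreateserial 2>/dev/null

# 4. Confirm that the signed certificate chains back to the CA.
verify_out=$(openssl verify -CAfile "${demo_dir}/cacert.pem" "${demo_dir}/cert-signed")
echo "$verify_out"

# 5. Show who issued the certificate and for whom.
openssl x509 -in "${demo_dir}/cert-signed" -noout -subject -issuer
```

Running only steps 4 and 5 against the real cacert.pem and cert-signed files gives the same check for the certificates produced in this tutorial.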

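Finally, the certificates need to be imported into the keystore. keytool must be able to build a chain from the signed certificate to a trusted root, so import the CA certificate first and then the signed server certificate:

keytool -keystore ssl.keystore.jks -alias CARoot -import -file cacert.pem

keytool -keystore ssl.keystore.jks -alias localhost -import -file cert-signed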
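A quick way to confirm the import is to list the keystore entry. The helper below is a hypothetical sketch (the function name show_keystore_entry is not part of any tool); it prints the entry type, chain length, owner, and issuer, and returns 2 when keytool or the keystore file is missing. The password 123456 matches the one used in this article's configuration.

```shell
# show_keystore_entry KEYSTORE ALIAS STORE_PASS
# Prints key facts about one keystore entry; returns 2 if prerequisites are missing.
show_keystore_entry() {
  keystore="$1"
  entry_alias="$2"
  store_pass="$3"
  if ! command -v keytool >/dev/null 2>&1 || [ ! -f "$keystore" ]; then
    echo "skipped: keytool or ${keystore} not available" >&2
    return 2
  fi
  # A password on the command line is visible to other users; test use only.
  keytool -list -v -keystore "$keystore" -alias "$entry_alias" \
    -storepass "$store_pass" \
    | grep -E "Entry type|Certificate chain length|Owner:|Issuer:"
}

# Example call with the names used in this article.
show_keystore_entry "ssl.keystore.jks" "localhost" "123456" \
  || echo "keystore entry could not be inspected"
```

For a correctly imported CA-signed certificate, the entry is expected to be a PrivateKeyEntry whose issuer is the CA rather than the broker itself.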

Additionally, if the cluster is configured with SSL, each node will have an ssl.keystore.jks file, which contains the key pair and signed certificate for that node.

All Clients and Brokers can use the same truststore since it does not contain any sensitive information.

If internal broker communication does not require SSL, only the following configuration is needed:

# Enable the following setting to validate the client

# ssl.client.auth=required

# If SSL is not required between brokers, both SSL and PLAINTEXT need to be configured

listeners=PLAINTEXT://host.name:port,SSL://host.name:port

# If SSL is also used between brokers, it needs to be configured.

# security.inter.broker.protocol=SSL

# listeners=SSL://host.name:port

ssl.keystore.location=/root/automq/ssl/ssl.keystore.jks

ssl.keystore.password=123456

# If the private key is stored unencrypted, ssl.key.password does not need to be configured

ssl.key.password=123456

# The following two configurations are for two-way SSL, i.e., when the server needs to verify the client's identity

ssl.truststore.location=/root/automq/ssl/ssl.truststore.jks

# When there is no password, this configuration can be skipped

ssl.truststore.password=123456

# Set the following parameter to disable hostname verification

ssl.endpoint.identification.algorithm=

Optional settings:

- ssl.client.auth=none (Other values are "required", meaning client authentication is mandatory and the client must provide a valid certificate, and "requested", meaning the broker asks for a client certificate but clients without one can still connect. Using "requested" is discouraged as it gives a false sense of security and misconfigured clients will still connect successfully.)

- ssl.enabled.protocols=TLSv1.2,TLSv1.1,TLSv1 (Lists the SSL protocols to accept from clients. Note that SSL is deprecated in favor of TLS, so using SSL in production is not recommended.)

To enable SSL for broker-to-broker communication, add the following to the server.properties file:

security.inter.broker.protocol=SSL

Starting with Kafka 2.0.0, hostname verification is enabled by default for both client connections and broker-to-broker connections. This can be disabled by setting ssl.endpoint.identification.algorithm to an empty string.

client-ssl.properties file

security.protocol=SSL

ssl.truststore.location=/root/automq/ssl/client.truststore.jks

ssl.truststore.password=123456

# Set the following parameter to disable hostname verification

ssl.endpoint.identification.algorithm=

bin/kafka-server-start.sh /root/automq/config/kraft/ssl.properties

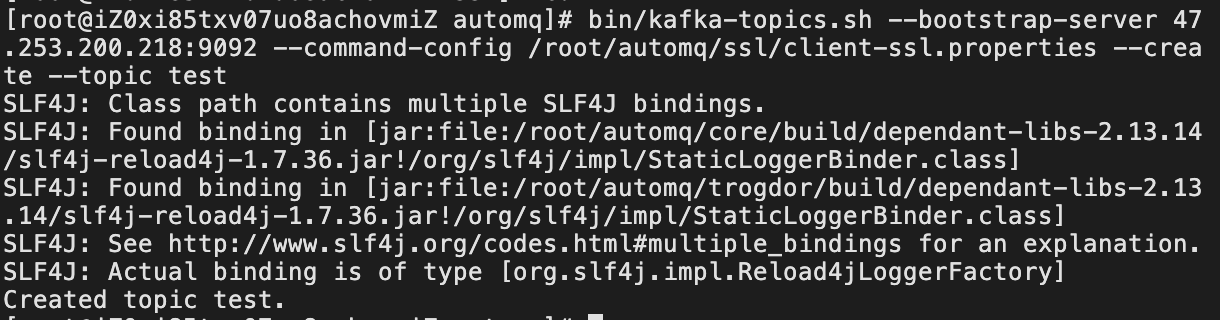
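Once the broker is running, the SSL listener can be probed with openssl s_client before any Kafka client is involved. The helper below is a hypothetical sketch: the function name check_tls_listener, the endpoint localhost:9092, and the CA file location are assumptions to adjust for your deployment. It returns 2 when openssl or the CA file is missing.

```shell
# check_tls_listener HOST:PORT CA_FILE
# Prints the certificate subject, issuer and verification result of a TLS listener.
check_tls_listener() {
  endpoint="$1"
  ca_file="$2"
  if ! command -v openssl >/dev/null 2>&1 || [ ! -f "$ca_file" ]; then
    echo "skipped: openssl or ${ca_file} not available" >&2
    return 2
  fi
  # </dev/null closes stdin so s_client exits right after the handshake.
  openssl s_client -connect "$endpoint" -CAfile "$ca_file" </dev/null 2>/dev/null \
    | grep -E "subject=|issuer=|Verify return code"
}

# Example call with assumed values.
check_tls_listener "localhost:9092" "cacert.pem" \
  || echo "no verified TLS listener found"
```

A line reading "Verify return code: 0 (ok)" indicates that the broker presented a certificate that chains to the given CA; any other code points at a keystore or truststore problem rather than a Kafka client issue.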

Create a Topic:

bin/kafka-topics.sh --bootstrap-server 47.253.200.218:9092 --command-config /root/automq/ssl/client-ssl.properties --create --topic test

Creation successful

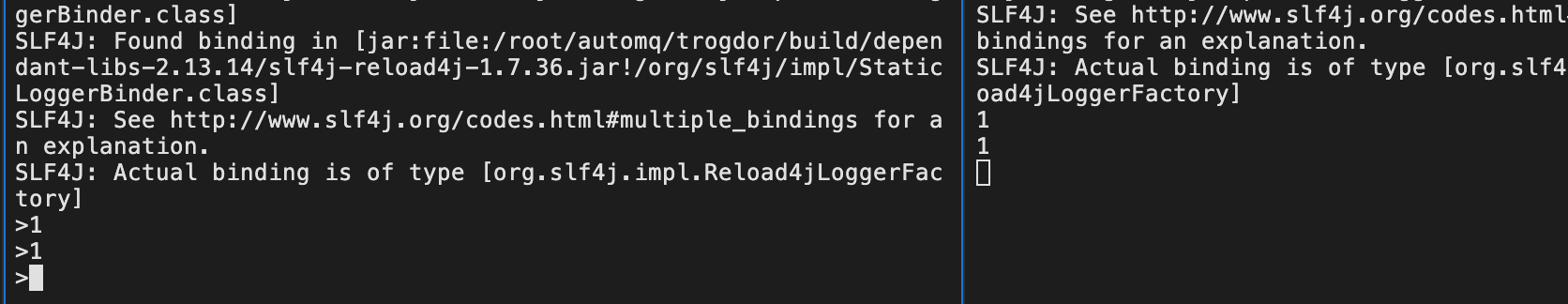

Producer & Consumer testing:

bin/kafka-console-producer.sh --bootstrap-server 47.253.200.218:9092 --topic test --producer.config /root/automq/ssl/client-ssl.properties

bin/kafka-console-consumer.sh --bootstrap-server 47.253.200.218:9092 --topic test --consumer.config /root/automq/ssl/client-ssl.properties

Message sent and received successfully

At this point, all SSL-related configurations have been completed. The overall process is illustrated in the following figure:

Typically, cloud providers like Alibaba Cloud offer SSL certificate services, allowing users to apply for certificates for their existing domains. Once the application is approved, the platform provides various certificate download options, including methods to download root certificates. This tutorial is based on the certificate configuration provided by Alibaba Cloud.

The JKS file downloaded from the cloud provider contains all the information we need, such as the digital signature certificate and key pair. We can obtain a file like automq.space.jks through the download, which can be configured directly as follows:

# Enable the following setting to validate the client

# ssl.client.auth=required

# If SSL is not required between brokers, both SSL and PLAINTEXT need to be configured

listeners=PLAINTEXT://host.name:port,SSL://host.name:port

# If SSL is also used between brokers, settings need to be configured

# security.inter.broker.protocol=SSL

# listeners=SSL://host.name:port

ssl.keystore.location=/root/automq/ssl/automq.space.jks

ssl.keystore.password=mhrx2d7h

# If the private key is stored unencrypted, ssl.key.password does not need to be configured

ssl.key.password=mhrx2d7h

ssl.truststore.location=/root/automq/ssl/automq.space.jks

ssl.truststore.password=mhrx2d7h

# Set the following parameter to disable hostname verification

ssl.endpoint.identification.algorithm=

client-ali-ssl.properties file

security.protocol=SSL

ssl.truststore.location=/root/automq/ssl/automq.space.jks

ssl.truststore.password=mhrx2d7h

# Set the following parameter to disable hostname verification

ssl.endpoint.identification.algorithm=

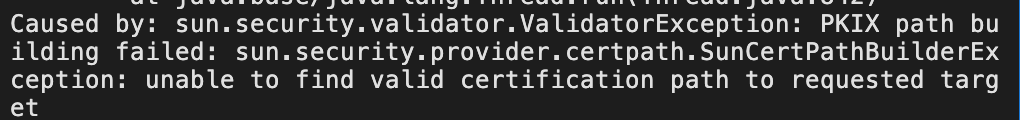

If we attempt to connect at this point, we might encounter the following error:

This usually indicates that the client is unable to validate the certificate provided by the server. This is because the necessary certificates are missing from the client's trust store; specifically, the CA certificate that signed the server's certificate is not in our client's trust store. Here, we need to download the root certificate provided by the cloud provider to configure the client. The specific method varies by cloud provider, so readers will need to explore this on their own. For detailed information, please refer to the relevant Alibaba Cloud certificate documentation.

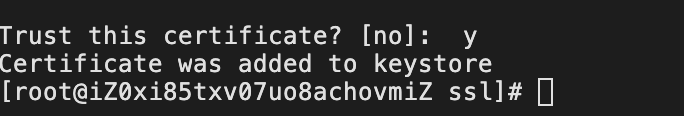

Import the root certificate into the client's trust store with keytool:

keytool -import -file /root/automq/ssl/DigicertG2ROOT.cer -keystore client.truststore.jks -alias root-certificate

Update the client-ali-ssl.properties file to use this trust store:

security.protocol=SSL

ssl.truststore.location=/root/automq/ssl/client.truststore.jks

ssl.truststore.password=123456

# Set the following parameter to disable hostname verification

ssl.endpoint.identification.algorithm=

bin/kafka-server-start.sh /root/automq/config/kraft/ssl.properties

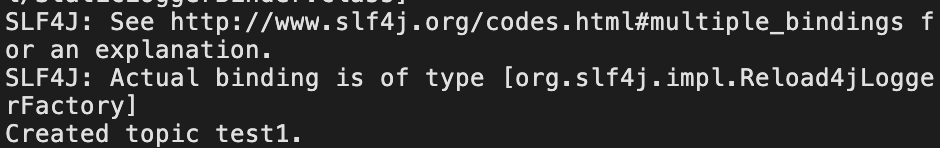

Create a Topic:

bin/kafka-topics.sh --bootstrap-server 47.253.200.218:9092 --command-config /root/automq/ssl/client-ali-ssl.properties --create --topic test1

Creation successful

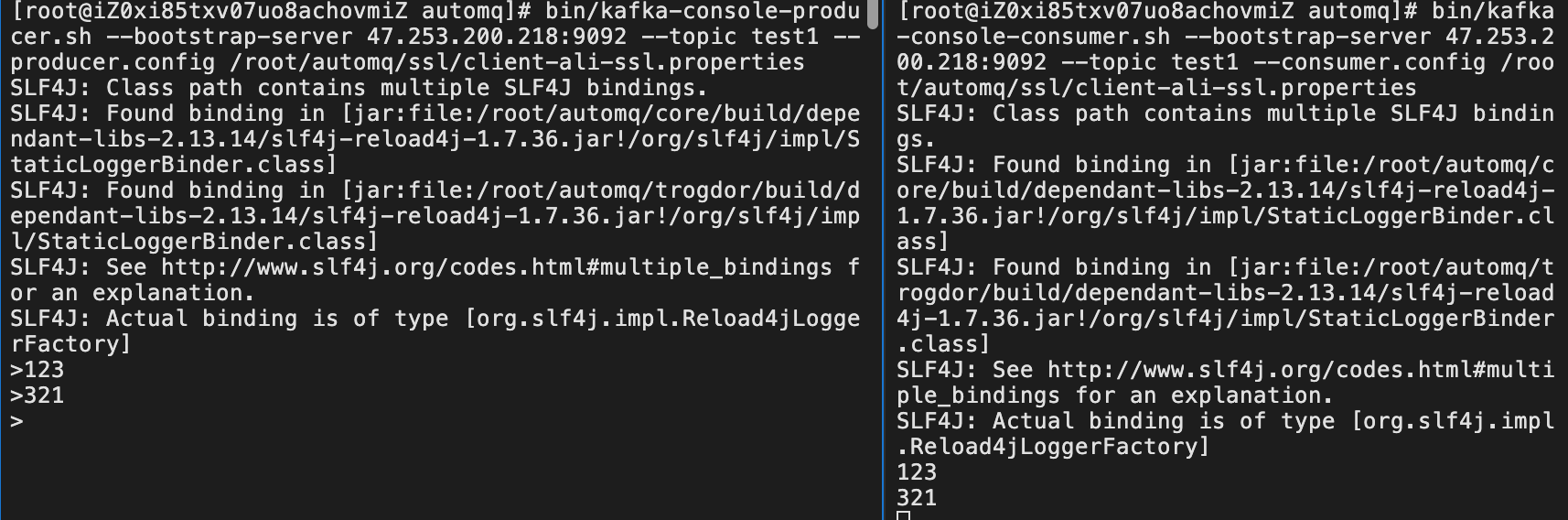

Producer & Consumer testing:

bin/kafka-console-producer.sh --bootstrap-server 47.253.200.218:9092 --topic test1 --producer.config /root/automq/ssl/client-ali-ssl.properties

bin/kafka-console-consumer.sh --bootstrap-server 47.253.200.218:9092 --topic test1 --consumer.config /root/automq/ssl/client-ali-ssl.properties

Message sent and received successfully

Using certificates provided by cloud providers involves significantly fewer steps compared to self-signed certificates. We can directly obtain the root certificate and the signed certificate from the cloud provider and configure them on the server. It's important to note that a self-built client does not naturally possess the root certificate, requiring us to download it from the cloud provider and import it into our own trust store. Otherwise, the SSL connection will fail.

References:

[1] AutoMQ: https://www.automq.com

[2] Apache Kafka: https://kafka.apache.org/

[3] Alibaba Cloud: https://www.alibabacloud.com/en/product/certificates

[4] Alibaba Cloud Certificate Documentation: https://www.alibabacloud.com/help/en/ssl-certificate/
