
AutoMQ Authentication & Authorization Best Practices

Editor's Note: This article will further introduce the concepts of authentication and authorization in Kafka. [AutoMQ] is a next-generation Kafka fully compatible with Apache Kafka®, which can help users reduce Kafka costs by over 90% and achieve rapid auto-scaling. As a dedicated supporter of the Kafka ecosystem, we will continue to promote Kafka technology. Stay tuned for more information.

In our previous article "AutoMQ SASL Security Authentication Configuration Guide" [1], we introduced the SASL authentication protocol configurations for the Apache Kafka (referred to as Kafka) server and client. Moreover, in the "AutoMQ SSL Security Protocol Configuration Guide" [2], we detailed how to utilize SSL (TLS) to ensure secure communication in Kafka or AutoMQ. This article will further outline the authentication methods and authorization strategies in Kafka, and provide an example to illustrate how to enable authentication and authorization in a real-world application scenario involving a Kafka or AutoMQ cluster.

Note that this article assumes the Kafka cluster is running in KRaft mode.

Let's first review the role of a listener. A listener defines an address (domain name/IP and port) on which the Kafka server listens, together with the security protocol used on that address. Multiple listeners can be combined to apply different configurations, for example:

- different security protocols for broker-to-broker, controller-to-broker, and client-to-broker communication;
- different security protocols for intranet (LAN or VPC) access and external network access to brokers.

Each listener name is mapped to a security protocol. Kafka supports the following security protocols:

- PLAINTEXT: no authentication, plaintext communication;
- SSL: communication encrypted with TLS;
- SASL_PLAINTEXT: authentication via SASL, but communication remains plaintext;
- SASL_SSL: authentication via SASL, with communication encrypted with TLS.

We can configure the use of the SASL_SSL protocol between the client and broker to ensure encryption, while using SASL_PLAINTEXT between cluster nodes to reduce CPU overhead from encryption and decryption.

listeners=EXTERNAL://:9092,BROKER://10.0.0.2:9094

advertised.listeners=EXTERNAL://broker1.example.com:9092,BROKER://broker1.local:9094

listener.security.protocol.map=EXTERNAL:SASL_SSL,BROKER:SASL_PLAINTEXT

inter.broker.listener.name=BROKER
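To make the mapping concrete, here is a minimal Python sketch that parses the three listener-related properties above. For each listener name it reports the bind address, the advertised address and the security protocol. It is an illustration only, not part of Kafka or AutoMQ, and it assumes the simple NAME://host:port syntax shown above.

```python
def parse_listeners(value: str) -> dict[str, str]:
    """Parse 'NAME://host:port,...' into {NAME: 'host:port'}."""
    result = {}
    for entry in value.split(","):
        entry = entry.strip()
        if entry:
            name, _, address = entry.partition("://")
            result[name] = address
    return result


def parse_protocol_map(value: str) -> dict[str, str]:
    """Parse 'NAME:PROTOCOL,...' into {NAME: PROTOCOL}."""
    return dict(entry.strip().split(":", 1) for entry in value.split(",") if entry.strip())


def describe_listeners(props: dict[str, str]) -> dict[str, tuple[str, str, str]]:
    """Return {listener: (bind address, advertised address, security protocol)}."""
    bind = parse_listeners(props["listeners"])
    advertised = parse_listeners(props.get("advertised.listeners", ""))
    protocols = parse_protocol_map(props["listener.security.protocol.map"])
    return {
        name: (addr, advertised.get(name, addr), protocols[name])
        for name, addr in bind.items()
    }


props = {
    "listeners": "EXTERNAL://:9092,BROKER://10.0.0.2:9094",
    "advertised.listeners": "EXTERNAL://broker1.example.com:9092,BROKER://broker1.local:9094",
    "listener.security.protocol.map": "EXTERNAL:SASL_SSL,BROKER:SASL_PLAINTEXT",
}
for name, (bind, adv, proto) in describe_listeners(props).items():
    print(f"{name}: bind={bind} advertised={adv} protocol={proto}")
# EXTERNAL: bind=:9092 advertised=broker1.example.com:9092 protocol=SASL_SSL
# BROKER: bind=10.0.0.2:9094 advertised=broker1.local:9094 protocol=SASL_PLAINTEXT
```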

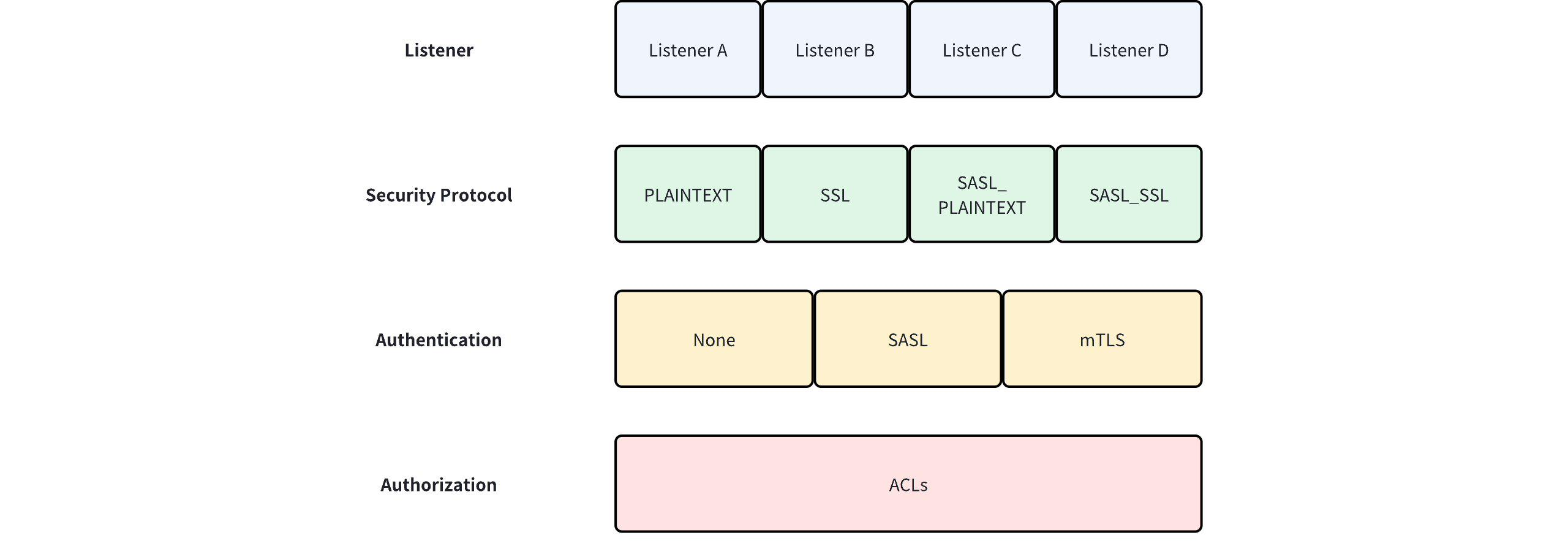

The following diagram illustrates the relationship between Listener, security protocols, authentication, and authorization.

The security protocol determines the authentication protocol between the server and the client. In contrast, authorization is mostly independent of authentication and only verifies whether the authenticated principal has permissions to perform operations on the resources involved in the request. The following sections will provide a detailed introduction to authentication methods and authorization rules.

First, let's briefly introduce the concept of a principal.

A principal is an entity that represents the client's identity, which corresponds to a KafkaPrincipal object [5]. After a client completes authentication through an authentication protocol, the broker will insert this KafkaPrincipal object into the RequestContext and pass it upstream for subsequent authorization. A KafkaPrincipal object mainly includes the principal type (currently, only the "User" type is available) and a name (which can be simply understood as the username declared by the client).
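As a rough model of the principal described above, the Python sketch below represents a principal as a (type, name) pair. It converts the pair to and from the Type:name string form used in settings such as super.users and in ACLs. The class is a simplified stand-in, not Kafka's Java KafkaPrincipal. The one detail taken from Kafka is that unauthenticated connections (for example over PLAINTEXT) are assigned the principal User:ANONYMOUS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    """Simplified stand-in for Kafka's KafkaPrincipal: a type plus a name."""
    principal_type: str
    name: str

    @classmethod
    def parse(cls, text: str) -> "Principal":
        # Split on the first colon only, e.g. "User:alice" -> ("User", "alice").
        principal_type, sep, name = text.partition(":")
        if not sep or not principal_type or not name:
            raise ValueError(f"expected 'Type:name', got {text!r}")
        return cls(principal_type, name)

    def __str__(self) -> str:
        return f"{self.principal_type}:{self.name}"


# Principal assigned to unauthenticated connections (e.g. PLAINTEXT listeners).
ANONYMOUS = Principal("User", "ANONYMOUS")

alice = Principal.parse("User:alice")  # hypothetical user
print(alice.name)       # alice
print(str(ANONYMOUS))   # User:ANONYMOUS
```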

The mapping between security protocols and authentication methods is as follows:

- PLAINTEXT: no authentication;
- SSL: no authentication, or mutual TLS (mTLS) authentication;
- SASL_PLAINTEXT: SASL;
- SASL_SSL: SASL.

To use mTLS authentication with the SSL protocol, the broker must be configured to require client certificates:

ssl.client.auth=required

The broker then identifies the client principal by the Distinguished Name (DN) in the client certificate. With this approach, adding or removing a user means issuing or revoking a certificate. Certificate-based authentication is therefore "cumbersome" for user management, especially where users are managed dynamically, and it is not a mainstream authentication method.
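By default, the mTLS principal name is the full DN of the client certificate (for example CN=app1,OU=payments,O=Example). Kafka's ssl.principal.mapping.rules setting can rewrite it into a shorter name. The Python sketch below only models that idea with ordinary regular expressions: the first matching rule wins, and otherwise the full DN is kept. It does not parse Kafka's own RULE:pattern/replacement/ syntax, and the sample DN and rule are hypothetical.

```python
import re


def map_dn(dn: str, rules: list[tuple[str, str]]) -> str:
    """Return a principal name for a certificate DN.

    Each rule is (regex, replacement); the first regex that fully matches
    the DN decides the result. If none matches, the full DN is returned,
    which mirrors the effect of Kafka's DEFAULT rule.
    """
    for pattern, replacement in rules:
        match = re.fullmatch(pattern, dn)
        if match:
            return match.expand(replacement)
    return dn


# Hypothetical rule: keep only the CN component.
rules = [(r"CN=([^,]+),.*", r"\1")]
print(map_dn("CN=app1,OU=payments,O=Example", rules))  # app1
print(map_dn("OU=ops,O=Example", rules))               # OU=ops,O=Example
```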

The SASL authentication protocol can be further subdivided into the following mechanisms:

- GSSAPI: authentication through a third-party Kerberos server (Kerberos is a ticket-based authentication protocol);
- PLAIN: simple username/password authentication. Note that it is unrelated to the PLAINTEXT security protocol mentioned earlier;
- SCRAM-SHA-256/SCRAM-SHA-512: based on the SCRAM (Salted Challenge Response Authentication Mechanism) algorithm. Credentials are stored in the cluster metadata, so Kafka nodes verify clients themselves without an external server;
- OAUTHBEARER: authentication through a third-party OAuth server.
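SCRAM lets Kafka nodes verify passwords without storing them. To illustrate why, here is a Python sketch of the core SCRAM-SHA-256 computation from RFC 5802 / RFC 7677, using only the standard library. It is a simplified illustration, not a wire-compatible client:

- it skips message framing, the nonce exchange and SASLprep normalization of the password;
- auth_message is a placeholder string;
- the password and salt are hypothetical.

```python
import hashlib
import hmac
import os


def _hmac(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def make_credential(password: str, salt: bytes, iterations: int) -> tuple[bytes, bytes]:
    """What the server stores: StoredKey and ServerKey, never the password."""
    salted = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    stored_key = hashlib.sha256(_hmac(salted, b"Client Key")).digest()
    server_key = _hmac(salted, b"Server Key")
    return stored_key, server_key


def client_proof(password: str, salt: bytes, iterations: int, auth_message: bytes) -> bytes:
    """ClientProof = ClientKey XOR HMAC(StoredKey, AuthMessage)."""
    salted = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    client_key = _hmac(salted, b"Client Key")
    signature = _hmac(hashlib.sha256(client_key).digest(), auth_message)
    return bytes(a ^ b for a, b in zip(client_key, signature))


def server_verify(stored_key: bytes, auth_message: bytes, proof: bytes) -> bool:
    # Recover ClientKey = ClientProof XOR ClientSignature, then compare H(ClientKey).
    signature = _hmac(stored_key, auth_message)
    client_key = bytes(a ^ b for a, b in zip(proof, signature))
    return hmac.compare_digest(hashlib.sha256(client_key).digest(), stored_key)


salt, iterations = os.urandom(16), 4096
stored_key, _server_key = make_credential("alice-secret", salt, iterations)
auth_message = b"client-first,server-first,client-final-without-proof"  # placeholder
good = client_proof("alice-secret", salt, iterations, auth_message)
bad = client_proof("wrong-password", salt, iterations, auth_message)
print(server_verify(stored_key, auth_message, good))  # True
print(server_verify(stored_key, auth_message, bad))   # False
```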

A Kafka broker can enable multiple SASL mechanisms at the same time, for example SCRAM-SHA-256/512 and PLAIN:

sasl.enabled.mechanisms=SCRAM-SHA-256,PLAIN,SCRAM-SHA-512

The broker side also requires additional JAAS configuration. This is covered in the "AutoMQ SASL Security Authentication Configuration Guide" [1], so it is not repeated here.

Note that Apache Kafka's built-in SASL/PLAIN implementation requires the usernames and passwords to be declared explicitly in each node's configuration. This static approach does not support dynamic user management. Dynamic behavior can be achieved by integrating an external username/password authentication server, for example through a custom server callback handler configured with "sasl.server.callback.handler.class".
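The "static" nature of the built-in SASL/PLAIN implementation is visible in its JAAS entry. The username and password options are the credentials the node itself uses. Each user_<name>="<password>" option adds one account that clients may log in with. The hypothetical Python helper below extracts both from a JAAS string like the ones used in the migration example later in this article. It handles only simple key="value" options, and the extra user alice is made up for illustration.

```python
import re

# Matches simple key="value" options inside a JAAS entry.
_OPTION = re.compile(r'(\w+)="([^"]*)"')


def parse_plain_jaas(jaas: str) -> tuple[tuple[str, str], dict[str, str]]:
    """Return ((own_username, own_password), {client_user: password})."""
    options = dict(_OPTION.findall(jaas))
    own = (options.get("username", ""), options.get("password", ""))
    users = {key[len("user_"):]: value
             for key, value in options.items() if key.startswith("user_")}
    return own, users


jaas = (
    "org.apache.kafka.common.security.plain.PlainLoginModule required "
    'username="automq" password="automq-secret" '
    'user_automq="automq-secret" user_alice="alice-secret";'
)
own, users = parse_plain_jaas(jaas)
print(own)            # ('automq', 'automq-secret')
print(sorted(users))  # ['alice', 'automq']
```

Adding or removing a client account with this mechanism means editing this string on every node and restarting it. That is the limitation the paragraph above describes.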

Authorization is based on the principal: the broker checks whether the authenticated principal has permission to perform the requested operation on the requested resource.

Key configurations include:

- authorizer.class.name: specifies the authorizer class; empty by default. In KRaft mode, you can use the officially provided "org.apache.kafka.metadata.authorizer.StandardAuthorizer", which performs authorization based on ACL (access control list) rules.
- super.users: sets the super users; empty by default. The format is User:{userName}, with multiple users separated by semicolons. Super users bypass ACL checks and have all permissions on all operations and resources.
- allow.everyone.if.no.acl.found: defines the default behavior when no ACL is found for a resource; false by default. With false, only super users can access such a resource; with true, any user can.

Kafka uses ACL (access control list) rules to restrict user access to resources. An ACL rule consists of two parts:

- ResourcePattern: defines the resource and how it is matched, comprising:
  - ResourceType: the type of resource, such as TOPIC, GROUP (consumer group), or CLUSTER;
  - ResourceName: the name of the resource;
  - PatternType: how the name is matched, either LITERAL (exact match) or PREFIXED (prefix match).
- AccessControlEntry: contains the user restriction information, including:
  - Principal: the principal, essentially the username (for example User:alice);
  - Host: the user's host address;
  - AclOperation: the operation, such as READ, WRITE, CREATE, or DELETE;
  - AclPermissionType: the permission type, either ALLOW or DENY.

In summary, an ACL rule can be read as:

Allow or deny the principal {Principal}, connecting from {Host}, to perform {AclOperation} on resources of type {ResourceType} whose names match {ResourceName} under the {PatternType} pattern type.
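The two parts of an ACL rule map naturally onto two small records. The Python sketch below models them with dataclasses and renders a binding as the sentence above. The names mirror Kafka's concepts, but these are not Kafka classes, and the principal and topic values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourcePattern:
    resource_type: str  # e.g. "TOPIC", "GROUP", "CLUSTER"
    name: str           # e.g. "orders", or "*" with LITERAL for any name
    pattern_type: str   # "LITERAL" or "PREFIXED"


@dataclass(frozen=True)
class AccessControlEntry:
    principal: str      # e.g. "User:alice"
    host: str           # e.g. "10.0.0.5", or "*" for any host
    operation: str      # e.g. "READ", "WRITE", "CREATE", "DELETE"
    permission: str     # "ALLOW" or "DENY"


@dataclass(frozen=True)
class AclBinding:
    pattern: ResourcePattern
    entry: AccessControlEntry

    def describe(self) -> str:
        p, e = self.pattern, self.entry
        return (f"{e.permission} {e.principal} from {e.host} to {e.operation} "
                f"{p.resource_type} resources matching {p.name!r} ({p.pattern_type})")


acl = AclBinding(ResourcePattern("TOPIC", "orders", "LITERAL"),
                 AccessControlEntry("User:alice", "*", "READ", "ALLOW"))
print(acl.describe())
# ALLOW User:alice from * to READ TOPIC resources matching 'orders' (LITERAL)
```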

When both of the following conditions are met:

- an ACL exists that allows the user to perform the operation;
- no ACL exists that denies the user from performing the operation;

the user is allowed to perform the operation on the resource.
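The decision procedure described in this section can be sketched as follows:

- super users are checked first;
- a matching DENY wins over any ALLOW;
- allow.everyone.if.no.acl.found decides only when no ACL covers the resource at all.

This is a simplified Python model with the following assumptions:

- resource matching is reduced to exact names plus the LITERAL "*" wildcard;
- Kafka details such as implied operations and the ALL operation are ignored;
- all users, hosts and topics are hypothetical.

```python
from collections.abc import Collection, Sequence
from typing import NamedTuple


class Acl(NamedTuple):
    resource_type: str
    resource_name: str  # exact name, or "*" for any name (LITERAL wildcard)
    principal: str
    host: str           # exact host, or "*"
    operation: str
    permission: str     # "ALLOW" or "DENY"


def authorize(principal: str, host: str, operation: str,
              resource_type: str, resource_name: str,
              acls: Sequence[Acl],
              super_users: Collection[str] = frozenset(),
              allow_if_no_acl: bool = False) -> bool:
    if principal in super_users:
        return True  # super users bypass ACLs entirely
    # ACLs attached to this resource, regardless of which principal they name.
    on_resource = [a for a in acls if a.resource_type == resource_type
                   and a.resource_name in (resource_name, "*")]
    if not on_resource:
        return allow_if_no_acl  # allow.everyone.if.no.acl.found
    applicable = [a for a in on_resource if a.principal == principal
                  and a.host in (host, "*") and a.operation == operation]
    if any(a.permission == "DENY" for a in applicable):
        return False  # a matching DENY always wins
    return any(a.permission == "ALLOW" for a in applicable)


acls = [
    Acl("TOPIC", "orders", "User:alice", "*", "READ", "ALLOW"),
    Acl("TOPIC", "orders", "User:alice", "10.0.0.9", "READ", "DENY"),
]
print(authorize("User:alice", "10.0.0.5", "READ", "TOPIC", "orders", acls))  # True
print(authorize("User:alice", "10.0.0.9", "READ", "TOPIC", "orders", acls))  # False
print(authorize("User:bob", "10.0.0.5", "READ", "TOPIC", "orders", acls))    # False
```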

The wildcard "*" can be used as the value of ResourceName or Host. For Host, "*" means any address. For ResourceName, the meaning depends on the pattern type:

- with LITERAL, "*" matches any resource name;
- with PREFIXED, "*" only matches resources whose names start with the literal character "*".

Additionally, when ResourceType is CLUSTER, ResourceName can only be "kafka-cluster".
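The name and host matching rules above, including the special case for CLUSTER, can be expressed as a few small Python functions. This is an illustrative sketch of the rules as stated in this section, not Kafka's implementation.

```python
def resource_matches(pattern_type: str, pattern_name: str, resource_name: str) -> bool:
    if pattern_type == "LITERAL":
        # With LITERAL, "*" is the wildcard and matches any name.
        return pattern_name == "*" or pattern_name == resource_name
    if pattern_type == "PREFIXED":
        # With PREFIXED, "*" is an ordinary character in the prefix.
        return resource_name.startswith(pattern_name)
    raise ValueError(f"unknown pattern type {pattern_type!r}")


def host_matches(acl_host: str, client_host: str) -> bool:
    return acl_host == "*" or acl_host == client_host


def validate_pattern(resource_type: str, name: str) -> None:
    # For CLUSTER, the only valid resource name is "kafka-cluster".
    if resource_type == "CLUSTER" and name != "kafka-cluster":
        raise ValueError("CLUSTER ACLs must use the name 'kafka-cluster'")


print(resource_matches("LITERAL", "*", "orders"))           # True
print(resource_matches("PREFIXED", "orders-", "orders-eu"))  # True
print(resource_matches("PREFIXED", "*", "orders"))           # False
print(host_matches("*", "10.0.0.5"))                         # True
```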

In some situations, you need to switch security protocols, or enable authentication on a cluster that previously ran without it. For example, a development cluster typically uses the default PLAINTEXT configuration. If PLAINTEXT has also ended up in a test or production environment, the security protocol must be upgraded and authentication and authorization enabled.

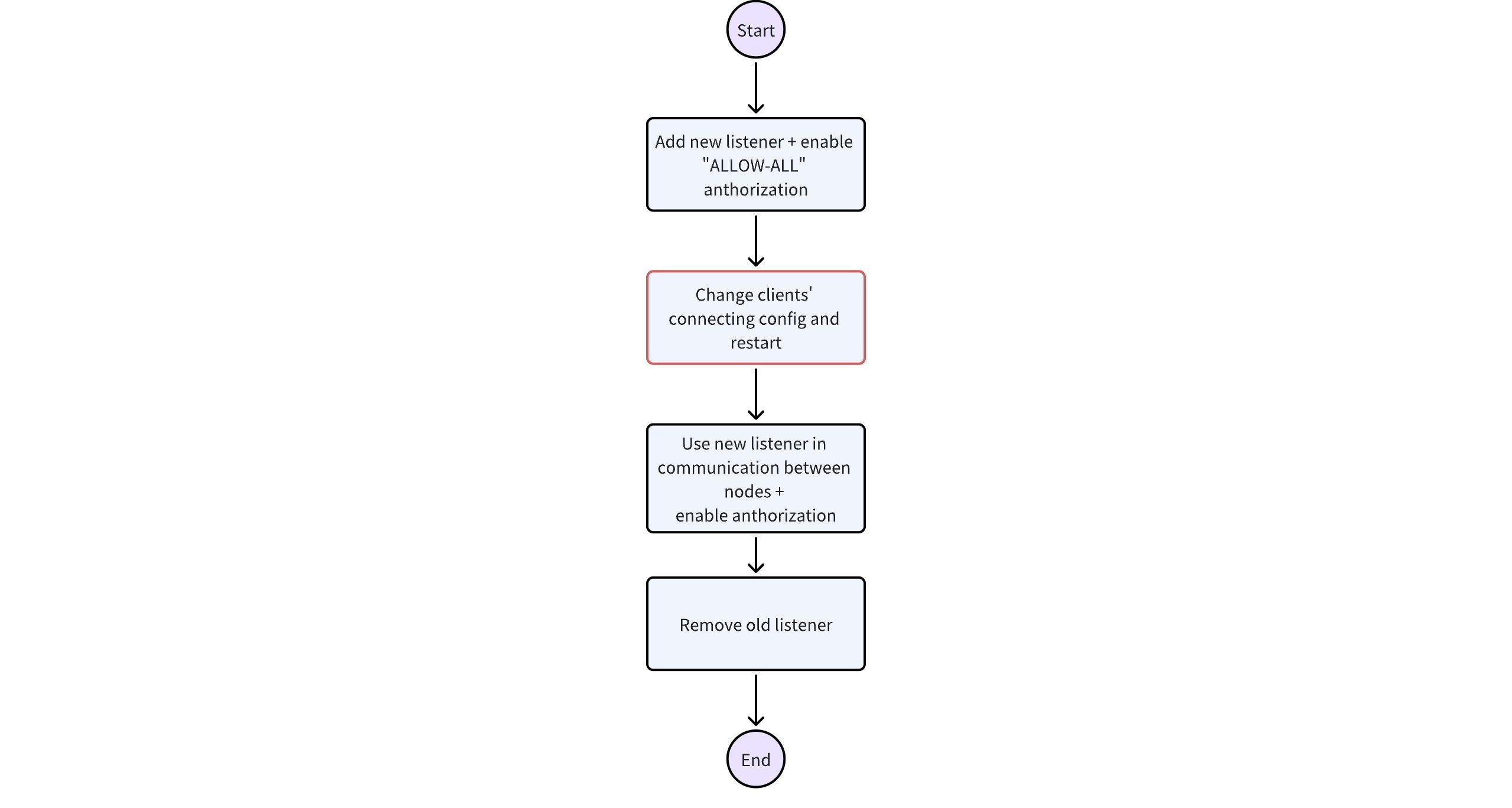

The following example illustrates a smooth transition from "PLAINTEXT, no authentication" to "SASL_PLAINTEXT, with authentication." Changes to other security protocols can follow a similar approach.

The scenario assumes three Kafka nodes deployed on the same machine and distinguished by port. Each node runs in combined mode, acting as both controller and broker. Note that each step of the change requires a restart of every node.

Overall logical diagram:

In total, the cluster goes through three rounds of restarts, while client applications need to be reconfigured and restarted only once.

The following is a partial configuration of the first node under the PLAINTEXT protocol; the other nodes are configured similarly:

node.id=1

controller.quorum.voters=1@localhost:9093,2@localhost:9095,3@localhost:9097

listeners=PLAINTEXT://:9092,CONTROLLER://:9093

inter.broker.listener.name=PLAINTEXT

advertised.listeners=PLAINTEXT://localhost:9092

controller.listener.names=CONTROLLER

listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL
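Because the three nodes differ only in their IDs and ports, their baseline configurations can be generated. The Python sketch below assumes node n uses port 9090 + 2n for its PLAINTEXT listener and 9091 + 2n for its CONTROLLER listener. The controller ports (9093, 9095, 9097) match the controller.quorum.voters line above. The PLAINTEXT ports of nodes 2 and 3 (9094 and 9096) are an assumption, since only node 1's configuration is shown. Like the original, the output is partial: settings such as process.roles and log directories are omitted.

```python
NODE_IDS = (1, 2, 3)
PROTOCOL_MAP = ("CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,"
                "SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL")


def plaintext_port(node_id: int) -> int:
    return 9090 + 2 * node_id  # assumption: 9092, 9094, 9096


def controller_port(node_id: int) -> int:
    return 9091 + 2 * node_id  # 9093, 9095, 9097, as in controller.quorum.voters


def baseline_config(node_id: int) -> str:
    """Render the partial PLAINTEXT-phase configuration for one node."""
    voters = ",".join(f"{n}@localhost:{controller_port(n)}" for n in NODE_IDS)
    lines = [
        f"node.id={node_id}",
        f"controller.quorum.voters={voters}",
        f"listeners=PLAINTEXT://:{plaintext_port(node_id)},CONTROLLER://:{controller_port(node_id)}",
        "inter.broker.listener.name=PLAINTEXT",
        f"advertised.listeners=PLAINTEXT://localhost:{plaintext_port(node_id)}",
        "controller.listener.names=CONTROLLER",
        f"listener.security.protocol.map={PROTOCOL_MAP}",
    ]
    return "\n".join(lines) + "\n"


for node_id in NODE_IDS:
    print(baseline_config(node_id))
```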

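Phase 1: bring the new SASL_PLAINTEXT listeners online alongside the existing ones and enable the authorizer, while communication between nodes still uses the original listeners. The configuration of the first node becomes:

node.id=1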

# No change

controller.quorum.voters=1@localhost:9093,2@localhost:9095,3@localhost:9097

# New listeners for controller and broker are online

listeners=PLAINTEXT://:9092,CONTROLLER://:9093,BROKER_SASL://:9192,CONTROLLER_SASL://:9193

# No change

inter.broker.listener.name=PLAINTEXT

# New addresses are online

advertised.listeners=PLAINTEXT://localhost:9092,BROKER_SASL://localhost:9192

# New listeners are online

controller.listener.names=CONTROLLER,CONTROLLER_SASL

# Authorization settings

authorizer.class.name=org.apache.kafka.metadata.authorizer.StandardAuthorizer

# Allow everyone to access resources

allow.everyone.if.no.acl.found=true

# Superuser for inter-node authentication

super.users=User:automq

sasl.enabled.mechanisms=SCRAM-SHA-256,PLAIN,SCRAM-SHA-512

# Specify the SASL mechanism for broker communication

sasl.mechanism.inter.broker.protocol=PLAIN

# Specify the SASL mechanism for controller communication

sasl.mechanism.controller.protocol=PLAIN

# Static username-password configuration

listener.name.broker_sasl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="automq" password="automq-secret" user_automq="automq-secret";

listener.name.controller_sasl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="automq" password="automq-secret" user_automq="automq-secret";

# Authentication module configuration

listener.name.broker_sasl.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.controller_sasl.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.broker_sasl.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.controller_sasl.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

# Add new listeners to the security protocol mapping

listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL,BROKER_SASL:SASL_PLAINTEXT,CONTROLLER_SASL:SASL_PLAINTEXT

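Here, "allow.everyone.if.no.acl.found" is set to true so that enabling the authorizer does not immediately break existing traffic. Clients connecting over PLAINTEXT are unauthenticated and are authorized as the principal User:ANONYMOUS. So are the inter-node requests still sent over the PLAINTEXT and CONTROLLER listeners. With this setting, they are still allowed on any resource that has no ACLs.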

Setting "controller.listener.names" to "CONTROLLER,CONTROLLER_SASL" makes the controller accept connections on both listeners. The broker on this node uses the first listener in the list, CONTROLLER, to communicate with the controller, and therefore uses its PLAINTEXT security protocol.
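Several of the relationships explained above can be checked mechanically before a restart:

- every listener needs a protocol mapping;
- every controller listener must be one of the node's listeners;
- the inter-broker listener must be an advertised, non-controller listener;
- the broker reaches the controller through the first entry of controller.listener.names.

The Python sketch below is a hypothetical pre-restart sanity check for the listener settings of node 1 in this phase. It is not a Kafka tool; Kafka performs its own, more complete validation at startup.

```python
def parse_props(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            props[key.strip()] = value.strip()
    return props


def listener_names(value: str) -> list[str]:
    return [entry.split("://", 1)[0] for entry in value.split(",") if entry]


def check_listeners(props: dict[str, str]) -> list[str]:
    problems = []
    listeners = listener_names(props["listeners"])
    advertised = listener_names(props.get("advertised.listeners", ""))
    protocol_map = dict(e.split(":", 1) for e in props["listener.security.protocol.map"].split(","))
    controllers = props["controller.listener.names"].split(",")
    for name in listeners:
        if name not in protocol_map:
            problems.append(f"{name} has no entry in listener.security.protocol.map")
    for name in controllers:
        if name not in listeners:
            problems.append(f"controller listener {name} is not in listeners")
    ibl = props["inter.broker.listener.name"]
    if ibl not in advertised or ibl in controllers:
        problems.append(f"inter.broker.listener.name {ibl} must be an advertised, non-controller listener")
    return problems


phase1 = parse_props("""
listeners=PLAINTEXT://:9092,CONTROLLER://:9093,BROKER_SASL://:9192,CONTROLLER_SASL://:9193
inter.broker.listener.name=PLAINTEXT
advertised.listeners=PLAINTEXT://localhost:9092,BROKER_SASL://localhost:9192
controller.listener.names=CONTROLLER,CONTROLLER_SASL
listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL,BROKER_SASL:SASL_PLAINTEXT,CONTROLLER_SASL:SASL_PLAINTEXT
""")
print(check_listeners(phase1))  # []
print("broker -> controller via", phase1["controller.listener.names"].split(",")[0])  # CONTROLLER
```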

After completing this phase, notify the business teams to reconfigure their clients for SASL_PLAINTEXT on the new listener (port 9192 on this node). At the same time, configure the necessary ACL rules for each business team.
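For the user and ACL setup mentioned above, the standard Kafka CLI tools can be used. The Python sketch below only assembles the argument lists; it does not run anything:

- kafka-configs.sh creates a SCRAM-SHA-256 credential;
- kafka-acls.sh grants access through its --producer and --consumer convenience options.

All of the following are hypothetical: the user alice, the topic orders, the group orders-app, and the admin.properties file, which would hold the SASL client settings for the super user automq. To run the commands against a live cluster, pass each list to subprocess.run.

```python
import shlex

BOOTSTRAP = "localhost:9192"       # BROKER_SASL listener of node 1
ADMIN_CONFIG = "admin.properties"  # hypothetical: SASL settings for User:automq


def scram_user_cmd(user: str, password: str) -> list[str]:
    """Create or update a SCRAM-SHA-256 credential for a user."""
    return ["kafka-configs.sh", "--bootstrap-server", BOOTSTRAP,
            "--command-config", ADMIN_CONFIG, "--alter",
            "--add-config", f"SCRAM-SHA-256=[password={password}]",
            "--entity-type", "users", "--entity-name", user]


def producer_acl_cmd(user: str, topic: str) -> list[str]:
    """Grant the ACLs a producer needs on a topic."""
    return ["kafka-acls.sh", "--bootstrap-server", BOOTSTRAP,
            "--command-config", ADMIN_CONFIG, "--add",
            "--allow-principal", f"User:{user}", "--producer", "--topic", topic]


def consumer_acl_cmd(user: str, topic: str, group: str) -> list[str]:
    """Grant the ACLs a consumer needs on a topic and its consumer group."""
    return ["kafka-acls.sh", "--bootstrap-server", BOOTSTRAP,
            "--command-config", ADMIN_CONFIG, "--add",
            "--allow-principal", f"User:{user}", "--consumer",
            "--topic", topic, "--group", group]


for cmd in (scram_user_cmd("alice", "alice-secret"),
            producer_acl_cmd("alice", "orders"),
            consumer_acl_cmd("alice", "orders", "orders-app")):
    print(shlex.join(cmd))  # shell-quoted, ready to copy into a terminal
```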

Phase 2: after the previous phase, the listeners with the new protocol are online and serving clients, but communication between cluster nodes still uses the original protocol. This phase upgrades the internal communication:

node.id=1

# Use the new controller ports

controller.quorum.voters=1@localhost:9193,2@localhost:9195,3@localhost:9197

# No change

listeners=PLAINTEXT://:9092,CONTROLLER://:9093,BROKER_SASL://:9192,CONTROLLER_SASL://:9193

# Use the new protocol

inter.broker.listener.name=BROKER_SASL

# No change

advertised.listeners=PLAINTEXT://localhost:9092,BROKER_SASL://localhost:9192

# Note the change in order: the broker now reaches the controller via CONTROLLER_SASL

controller.listener.names=CONTROLLER_SASL,CONTROLLER

# Authorization settings

authorizer.class.name=org.apache.kafka.metadata.authorizer.StandardAuthorizer

# allow.everyone.if.no.acl.found=true

super.users=User:automq

# No change

sasl.enabled.mechanisms=SCRAM-SHA-256,PLAIN,SCRAM-SHA-512

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.mechanism.controller.protocol=PLAIN

listener.name.broker_sasl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="automq" password="automq-secret" user_automq="automq-secret";

listener.name.controller_sasl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="automq" password="automq-secret" user_automq="automq-secret";

# No change

listener.name.broker_sasl.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.controller_sasl.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.broker_sasl.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.controller_sasl.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

# No change

listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL,BROKER_SASL:SASL_PLAINTEXT,CONTROLLER_SASL:SASL_PLAINTEXT

Note that "allow.everyone.if.no.acl.found" is now commented out, so it falls back to its default value of false: resources without ACLs can be accessed only by super users.
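Comparing the two phases key by key confirms which settings actually change in this round of restarts. The Python sketch below diffs the listener and authorization settings of node 1 from phases 1 and 2, with values copied from the configurations above. The SASL and JAAS lines are identical in both phases and are left out for brevity.

```python
from typing import Optional


def diff_props(old: dict[str, str], new: dict[str, str]) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Return {key: (old_value, new_value)} for every key whose value differs."""
    keys = old.keys() | new.keys()
    return {k: (old.get(k), new.get(k)) for k in sorted(keys) if old.get(k) != new.get(k)}


phase1 = {
    "controller.quorum.voters": "1@localhost:9093,2@localhost:9095,3@localhost:9097",
    "listeners": "PLAINTEXT://:9092,CONTROLLER://:9093,BROKER_SASL://:9192,CONTROLLER_SASL://:9193",
    "inter.broker.listener.name": "PLAINTEXT",
    "advertised.listeners": "PLAINTEXT://localhost:9092,BROKER_SASL://localhost:9192",
    "controller.listener.names": "CONTROLLER,CONTROLLER_SASL",
    "allow.everyone.if.no.acl.found": "true",
    "super.users": "User:automq",
}
phase2 = {
    "controller.quorum.voters": "1@localhost:9193,2@localhost:9195,3@localhost:9197",
    "listeners": "PLAINTEXT://:9092,CONTROLLER://:9093,BROKER_SASL://:9192,CONTROLLER_SASL://:9193",
    "inter.broker.listener.name": "BROKER_SASL",
    "advertised.listeners": "PLAINTEXT://localhost:9092,BROKER_SASL://localhost:9192",
    "controller.listener.names": "CONTROLLER_SASL,CONTROLLER",
    "super.users": "User:automq",
}

for key, (before, after) in diff_props(phase1, phase2).items():
    print(f"{key}: {before} -> {after}")
```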

Phase 3: once all clients have switched to the new listener, remove the old listeners:

node.id=1

# No change

controller.quorum.voters=1@localhost:9193,2@localhost:9195,3@localhost:9197

# Remove old listeners

listeners=BROKER_SASL://:9192,CONTROLLER_SASL://:9193

# No change

inter.broker.listener.name=BROKER_SASL

# Remove old advertised address

advertised.listeners=BROKER_SASL://localhost:9192

# Remove old listener

controller.listener.names=CONTROLLER_SASL

# Authorization settings

authorizer.class.name=org.apache.kafka.metadata.authorizer.StandardAuthorizer

# allow.everyone.if.no.acl.found=true

super.users=User:automq

# No change

sasl.enabled.mechanisms=SCRAM-SHA-256,PLAIN,SCRAM-SHA-512

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.mechanism.controller.protocol=PLAIN

listener.name.broker_sasl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="automq" password="automq-secret" user_automq="automq-secret";

listener.name.controller_sasl.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="automq" password="automq-secret" user_automq="automq-secret";

# No change

listener.name.broker_sasl.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.controller_sasl.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.broker_sasl.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

listener.name.controller_sasl.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;

# No change

listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL,BROKER_SASL:SASL_PLAINTEXT,CONTROLLER_SASL:SASL_PLAINTEXT

At this point, the old protocol is phased out, and all communication is based on the SASL_PLAINTEXT protocol.

This article provides an overview of authentication protocols and authorization strategies in Kafka. It begins with an introduction to the mapping between listeners and security protocols, as well as the mapping between security protocols and authentication methods. Next, it details the various authentication protocols supported in Kafka, along with ACL authorization strategies. Upon successful authentication, Kafka generates a principal for fine-grained authorization at the application layer. Finally, it discusses how to upgrade the authentication protocol and enable authorization in a running Kafka cluster.

[1] https://www.automq.com/blog/automq-sasl-security-authentication-configuration-guide

[2] https://www.automq.com/blog/automq-ssl-security-protocol-configuration-tutorial

[3] https://developer.confluent.io/courses/security/authentication-basics/
