This project is a FastAPI-based Chatbot AI application that provides an API for uploading PDF files, extracting text, generating embeddings, and answering questions based on the uploaded documents using open-source LLM models.

You can find a comprehensive slide presentation for this project in the following link:

https://docs.google.com/presentation/d/1jmwY-pLP_y-Qibolwzeee7xuLOtJHgtq1Ib89h1QIOU/edit?usp=sharing

The slide presentation is a valuable resource for understanding the intricacies of the project. It is especially useful for:

- Developers: Looking to contribute or integrate the API into their applications.

- Stakeholders: Interested in the technical and functional aspects of the project.

- Educators and Students: Who want to learn about building AI-powered applications using open-source tools.

We encourage you to review the presentation to gain deeper insights into the project's capabilities, architecture, and potential applications.

- OpenRAG API Demo

- Upload PDFs: Upload PDF files and extract text content.

- Generate Embeddings: Create text embeddings using Sentence Transformers.

- Question Answering: Answer questions based on the uploaded documents using an open-source LLM model.

- Database Storage: Store extracted texts and embeddings in a PostgreSQL database with vector support.

- API Authentication: Secure API endpoints using an API key.

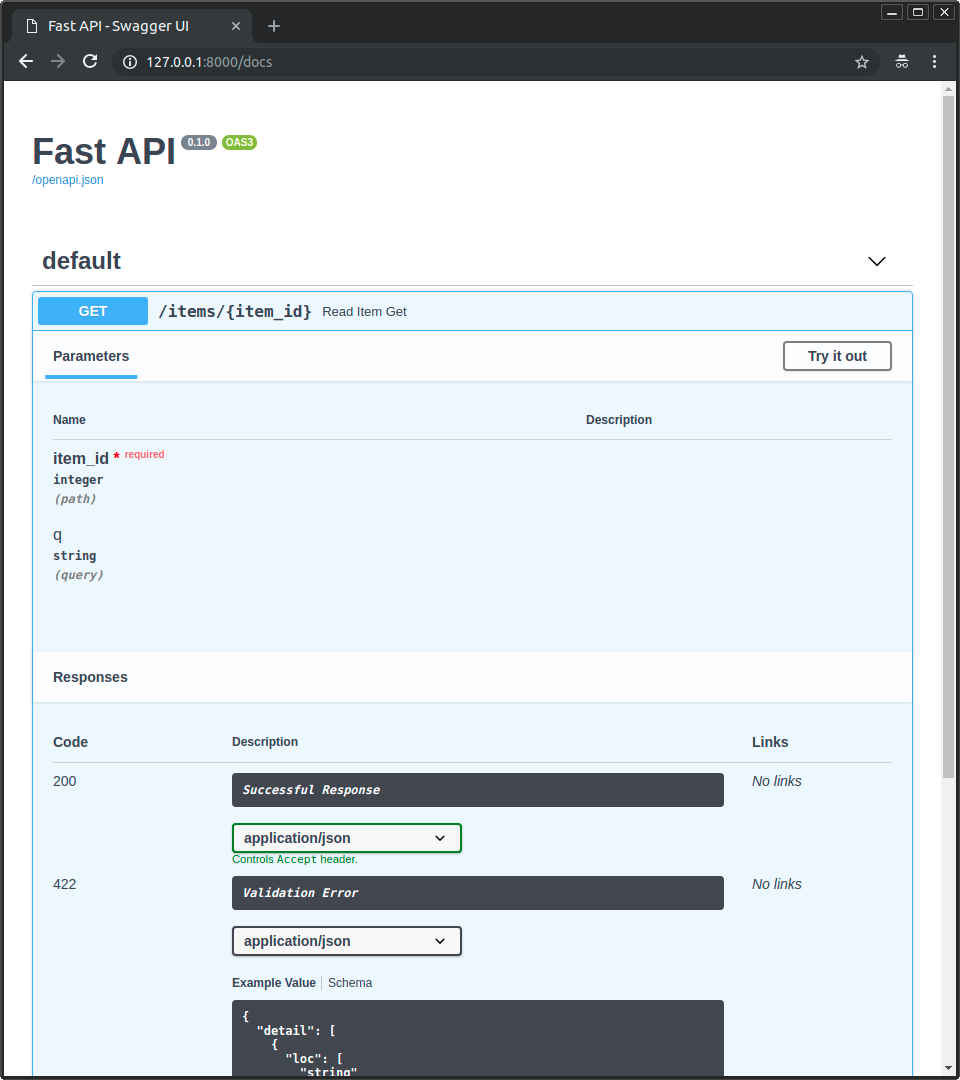

- Interactive Documentation: Auto-generated API docs accessible through

/docsendpoint.

- Python: Version 3.11.11

- PostgreSQL: With

vectorextension enabled. - uv: Used as the package and project manager.

- GPU (Optional): CUDA-enabled GPU for faster model inference.

git clone /~https://github.com/meetap/open-rag-api-demo.git

cd open-rag-api-demoEnsure you have uv installed:

pip install uvCreate a virtual environment and activate it:

uv venv create

uv venv activatepython -m venv venv

source venv/bin/activate # On Windows use `venv\Scripts\activate`Using uv:

uv pip install -e .Or if you use poetry you can install them with:

poetry installInstall the required models for embeddings and the LLM:

pip install sentence-transformers transformers torchCopy the sample environment file and modify it:

cp .env.sample .envEdit .env with your configurations:

DB_HOST=your_db_host

DB_NAME=your_db_name

DB_USER=your_db_user

DB_PASSWORD=your_db_password

EMBEDDING_MODEL_NAME=sentence-transformers/paraphrase-MiniLM-L6-v2

LLM_MODEL_NAME=google/flan-t5-base

MAX_PROMPT_LENGTH=2048

SERVICE_API_KEY=your_service_api_keyEnsure PostgreSQL is installed and running. Create a database and enable the vector extension:

CREATE DATABASE your_db_name;

\c your_db_name;

CREATE EXTENSION IF NOT EXISTS vector;- Database Configuration: Update the database credentials in the

.envfile. - Model Configuration: Set your preferred models in the

.envfile. - API Key: Set a secure

SERVICE_API_KEYin the.envfile for API authentication.

Start the FastAPI application using uvicorn:

uvicorn main:app --reloadThe application will be accessible at http://127.0.0.1:8000.

- Endpoint:

/upload - Method:

POST - Authentication: Bearer Token (

SERVICE_API_KEY) - Content-Type:

multipart/form-data - Parameter:

file(required): The PDF file to upload.

Sample Request using curl:

curl -X POST "http://127.0.0.1:8000/upload" \

-H "Authorization: Bearer your_service_api_key" \

-F "file=@/path/to/your/file.pdf"Response:

{

"status": "success",

"message": "PDF uploaded and processed successfully"

}-

Endpoint:

/ask -

Method:

POST -

Authentication: Bearer Token (

SERVICE_API_KEY) -

Content-Type:

application/json -

Body:

{ "question": "Your question here" }

Sample Request using curl:

curl -X POST "http://127.0.0.1:8000/ask" \

-H "Authorization: Bearer your_service_api_key" \

-H "Content-Type: application/json" \

-d '{"question": "What is the main topic of the uploaded document?"}'Response:

{

"data": {

"question": "What is the main topic of the uploaded document?",

"answer": "The main topic is...",

"file_names": ["file.pdf"]

},

"status": "success",

"message": "success"

}FastAPI provides interactive API documentation automatically generated from your code.

Navigate to http://127.0.0.1:8000/docs to access the Swagger UI, an interactive interface to test your API endpoints.

Alternatively, visit http://127.0.0.1:8000/redoc for ReDoc documentation.

- Swagger UI: http://127.0.0.1:8000/docs

- ReDoc: http://127.0.0.1:8000/redoc

These interfaces allow you to explore and test the API endpoints with ease.

open-rag-api-demo/

├── main.py # Main application file.

├── pyproject.toml # Project dependencies and metadata.

├── .env.sample # Sample environment configuration.

├── .python-version # Python version specification.

├── LICENSE # License file (MIT License).

└── README.md # Project documentation.- fastapi: Web framework for building APIs with Python.

- uvicorn: ASGI server for running FastAPI applications.

- psycopg2: PostgreSQL database adapter.

- numpy: Library for numerical computations.

- torch: PyTorch library for machine learning models.

- sentence-transformers: Library for easy use of Transformer models for encoding sentences.

- transformers: State-of-the-art Machine Learning for Pytorch and TensorFlow.

- pdfplumber: Library for extracting information from PDF files.

- python-multipart: Required for form data parsing in FastAPI.

All dependencies are specified in the pyproject.toml file.

This project is licensed under the MIT License.

- FastAPI: For providing an easy-to-use web framework.

- Hugging Face: For the Transformers and Sentence Transformers libraries.

- OpenAI: For advancements in AI models and research.

- pdfplumber: For simplifying PDF text extraction.

- Community Contributors: For their continuous support and contributions.