TensorFlow implementation of Deep Convolutional Generative Adversarial Networks (DCGAN), a stabilized variant of Generative Adversarial Networks. The referenced Torch code can be found here.

- Brandon Amos wrote an excellent blog post and image completion code based on the old version of this repo.

- To avoid fast convergence of the D (discriminator) network, the G (generator) network is updated twice for each D network update, which differs from the original paper.
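The update schedule in the last bullet can be sketched as one training iteration that runs a single D step followed by two G steps. This is a minimal, framework-agnostic sketch: `d_step` and `g_step` are hypothetical callables standing in for one optimizer step on each network, and running D before G is an assumption about the order, not taken from this repo's code.

```python
from typing import Callable, Tuple


def train_iteration(
    d_step: Callable[[], float],
    g_step: Callable[[], float],
    g_updates: int = 2,
) -> Tuple[float, float]:
    """Run one D update, then `g_updates` G updates; return the last losses.

    d_step / g_step are hypothetical callables that perform one optimizer
    step on the discriminator / generator and return that step's loss.
    """
    d_loss = d_step()
    g_loss = 0.0
    # Updating G more often than D keeps D from winning too quickly.
    for _ in range(g_updates):
        g_loss = g_step()
    return d_loss, g_loss
```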

Download your dataset and put it in a directory. All images must have the same channel layout: RGB (3 channels). Image dimensions must be at least 32x32. If your images are larger than 32x32 but have arbitrary dimensions, make sure the size used for training is a multiple of 32. To do this, pass `--crop True` while training and set `--output-height` to a multiple of 32, e.g. 32, 64, 96 or 128; make sure the `--output-height` you pass is no larger than the `--input-height` you pass.
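The size rules above can be checked, and an image cropped, roughly as follows. This is a minimal sketch, assuming a plain center crop on a NumPy RGB array; `is_valid_output_size` and `center_crop` are hypothetical helpers, and `main.py` may crop and resize differently. The training commands in this README pass equal input and output sizes (256), so the sketch accepts equality.

```python
import numpy as np


def is_valid_output_size(input_height: int, output_height: int) -> bool:
    """Check the --input-height / --output-height rules from this README."""
    return (
        output_height >= 32
        and output_height % 32 == 0
        and output_height <= input_height  # equality allowed, as in the example commands
    )


def center_crop(image: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Crop an (H, W, 3) RGB array around its centre to (out_h, out_w, 3)."""
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError(f"expected an RGB image (H, W, 3), got shape {image.shape}")
    h, w = image.shape[:2]
    if out_h > h or out_w > w:
        raise ValueError(f"crop {out_h}x{out_w} is larger than image {h}x{w}")
    top = (h - out_h) // 2
    left = (w - out_w) // 2
    return image[top:top + out_h, left:left + out_w]
```

For example, a 300x400 image cropped to 256x256 keeps rows 22 to 277 and columns 72 to 327.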

For a custom dataset, put your images into a directory named after the dataset, e.g. `--dataset landscape-data`, and make sure this directory is located inside the directory passed as `--data-dir`. For example, if you pass `--data-dir ./data` and `--dataset celebA`, the expected structure is `./data/celebA/*.jpg`. Also don't forget to pass the `--input-fname-pattern` argument, which specifies the image type (extension) of the files under `./data/celebA/`.
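The way `--data-dir`, `--dataset` and `--input-fname-pattern` combine into a file list can be sketched with the standard library. This assumes the pattern is a glob such as `*.jpg` joined onto `data_dir/dataset`; `list_dataset_images` is a hypothetical helper, not a function from `main.py`.

```python
import glob
import os
from typing import List


def list_dataset_images(data_dir: str, dataset: str, input_fname_pattern: str) -> List[str]:
    """Return the sorted image paths matching data_dir/dataset/input_fname_pattern."""
    pattern = os.path.join(data_dir, dataset, input_fname_pattern)
    return sorted(glob.glob(pattern))
```

With `--data-dir ./data --dataset celebA --input-fname-pattern "*.jpg"`, this would match `./data/celebA/*.jpg`; files with other extensions in the same folder are ignored.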

To save the best models, checkpoints, output samples during training and loss graphs, you need to set up a directory for them. Pass `--out-dir` as the parent directory for all of this data. The resulting structure is as follows:

- `--out-dir` e.g. `./output` - the code creates a nested directory named after `--dataset`/current-date-time inside it, so each dataset gets its own output directory, e.g. `./output/landscape-data/2023-06-13 06:10:11` (`--out-dir/--dataset/current_date_time`).
- `--sample-dir` e.g. `samples` - the code creates a directory named after `--sample-dir` inside the run directory (`--out-dir/--dataset/current_date_time`). It contains some fixed nested directories, e.g.
    - `output/landscape-pictures/2023-07-05 16:15:56/samples/losses` - saves the loss graph and CSV
    - `output/landscape-pictures/2023-07-05 16:15:56/samples/media/collage` - saves a collage at each epoch during training
    - `output/landscape-pictures/2023-07-05 16:15:56/samples/media/epoch` - saves 3 individual images at every epoch
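The directory layout above can be reproduced with a short helper. This is a sketch under assumptions: `make_run_dirs` is hypothetical, and the timestamp format `%Y-%m-%d %H:%M:%S` is inferred from the example paths rather than taken from the code. Note that colons are not allowed in Windows directory names, so this exact format only works on POSIX file systems.

```python
import os
from datetime import datetime
from typing import Dict, Optional, Tuple


def make_run_dirs(
    out_dir: str, dataset: str, sample_dir: str, now: Optional[datetime] = None
) -> Tuple[str, Dict[str, str]]:
    """Create out_dir/dataset/<timestamp>/sample_dir/{losses, media/collage, media/epoch}."""
    if now is None:
        now = datetime.now()
    # Timestamp format inferred from example paths such as "2023-07-05 16:15:56".
    run_dir = os.path.join(out_dir, dataset, now.strftime("%Y-%m-%d %H:%M:%S"))
    sample_root = os.path.join(run_dir, sample_dir)
    subdirs = {
        "losses": os.path.join(sample_root, "losses"),            # loss graph + CSV
        "collage": os.path.join(sample_root, "media", "collage"),  # per-epoch collage
        "epoch": os.path.join(sample_root, "media", "epoch"),      # per-epoch images
    }
    for path in subdirs.values():
        os.makedirs(path, exist_ok=True)
    return run_dir, subdirs
```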

- `--checkpoint-dir` e.g. `checkpoints` - directory under `--out-dir/--dataset/current_date_time/` where the model is stored during training.
- `--checkpoint-prefix` e.g. `checkpoint` - name prefix for models saved in `--checkpoint-dir`.
- `--sample-freq` e.g. `100` - number of iterations (batches) to wait between samples taken from the model during training.
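How `--checkpoint-prefix` and `--sample-freq` might be used can be sketched as below. Both helpers are hypothetical: `checkpoint_path` only joins the run directory, `--checkpoint-dir` and the prefix, and `sample_steps` lists the 1-based iterations at which a sample would be drawn if sampling happens whenever the iteration count is divisible by `--sample-freq` (an assumption about the trigger condition).

```python
import os
from typing import List


def checkpoint_path(run_dir: str, checkpoint_dir: str, checkpoint_prefix: str) -> str:
    """Build the path prefix under which checkpoints would be saved."""
    return os.path.join(run_dir, checkpoint_dir, checkpoint_prefix)


def sample_steps(num_batches: int, sample_freq: int) -> List[int]:
    """Return the 1-based iteration numbers at which a sample would be taken."""
    return [step for step in range(1, num_batches + 1) if step % sample_freq == 0]
```

With `--sample-freq 100`, a run of 250 batches would sample at iterations 100 and 200.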

- `--z-dim` e.g. `100` - length of the noise vector that seeds the generator for image generation.
- `--generate-test-image` e.g. `100` - number of images to generate during testing.
- `--visualize` e.g. `True` - make a GIF of generated images; works only for RGB images.
- `--load-best-model-only` e.g. `True` - if True, during testing, load the best model from `--checkpoint-dir/best_model*`; otherwise load the latest model from `--checkpoint-dir/checkpoint*`.
- `--train` e.g. `True` - True for training, False for testing.
- `--retrain` e.g. `True` - True if retraining from previously trained checkpoints, else False.
- `--load-model-dir` e.g. `output/landscape-pictures/2023-07-05 16:15:56/` - used if `--retrain` is True.
- `--load-model-prefix` e.g. `checkpoint` - model name prefix under `--load-model-dir`.
- `--batch-size` e.g. `4`
- `--g-learning-rate` e.g. `0.001`
- `--d-learning-rate` e.g. `0.001`
- `--beta1` e.g. `0.5` - momentum term for Adam.
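Since boolean flags such as `--train True` are passed as strings, a parser for these options needs an explicit string-to-bool converter. The sketch below is a hypothetical re-creation of a subset of the options with `argparse`; the defaults are simply the "e.g." values listed above, and `main.py`'s real parser may differ. `checkpoint_glob` illustrates the `--load-best-model-only` rule.

```python
import argparse
import os


def str2bool(value: str) -> bool:
    """Convert 'True'/'False'-style strings to bool (argparse's type=bool would not)."""
    lowered = value.strip().lower()
    if lowered in ("true", "1", "yes", "y"):
        return True
    if lowered in ("false", "0", "no", "n"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical subset of the options; defaults are the README's example values."""
    p = argparse.ArgumentParser(description="DCGAN options (illustrative subset)")
    p.add_argument("--z-dim", type=int, default=100)
    p.add_argument("--generate-test-image", type=int, default=100)
    p.add_argument("--visualize", type=str2bool, default=True)
    p.add_argument("--load-best-model-only", type=str2bool, default=True)
    p.add_argument("--train", type=str2bool, default=True)
    p.add_argument("--retrain", type=str2bool, default=False)
    p.add_argument("--load-model-dir", type=str, default="")
    p.add_argument("--load-model-prefix", type=str, default="checkpoint")
    p.add_argument("--checkpoint-dir", type=str, default="checkpoints")
    p.add_argument("--batch-size", type=int, default=4)
    p.add_argument("--g-learning-rate", type=float, default=0.001)
    p.add_argument("--d-learning-rate", type=float, default=0.001)
    p.add_argument("--beta1", type=float, default=0.5)
    return p


def checkpoint_glob(args: argparse.Namespace) -> str:
    """Pick best_model* or checkpoint* inside --checkpoint-dir for testing."""
    name = "best_model*" if args.load_best_model_only else "checkpoint*"
    return os.path.join(args.checkpoint_dir, name)
```

With `type=bool`, argparse would turn any non-empty string, including `"False"`, into `True`, which is why the sketch uses a converter. Dashed flag names become underscored attributes, e.g. `--z-dim` is read as `args.z_dim`.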

To train a model on a custom, self-downloaded dataset:

```
!python3 main.py --train True --dataset "landscape-data" --data-dir "data" --crop True \
    --output-height 256 --output-width 256 --input-width 256 --input-height 256 \
    --c-dim 3 --batch-size 4 --epoch 50 --logging-frequency 500 \
    --g-learning-rate 0.0005 --d-learning-rate 0.0005
```

To retrain a previously trained model:

```
!python3 main.py --retrain True --dataset "$dataset_name" --data-dir "$path_to_dataset" --crop True \
    --output-height 256 --output-width 256 --input-width 256 --input-height 256 \
    --c-dim 3 --batch-size 4 --epoch 50 --logging-frequency 500 \
    --g-learning-rate 0.00005 --d-learning-rate 0.00005 --load-model-prefix checkpoint \
    --load-model-dir "output/landscape-pictures/2023-07-05 16:15:56/"
```

To test with an existing model:

```
!python3 main.py --dataset "landscape-data" --data-dir "data" --crop True \
    --output-height 256 --output-width 256 --input-width 256 --input-height 256 \
    --c-dim 3 --batch-size 4 --epoch 200 --logging-frequency 500 \
    --g-learning-rate 0.001 --d-learning-rate 0.001 \
    --checkpoint-dir "output/landscape-pictures/2023-07-05 17:49:03/"
```

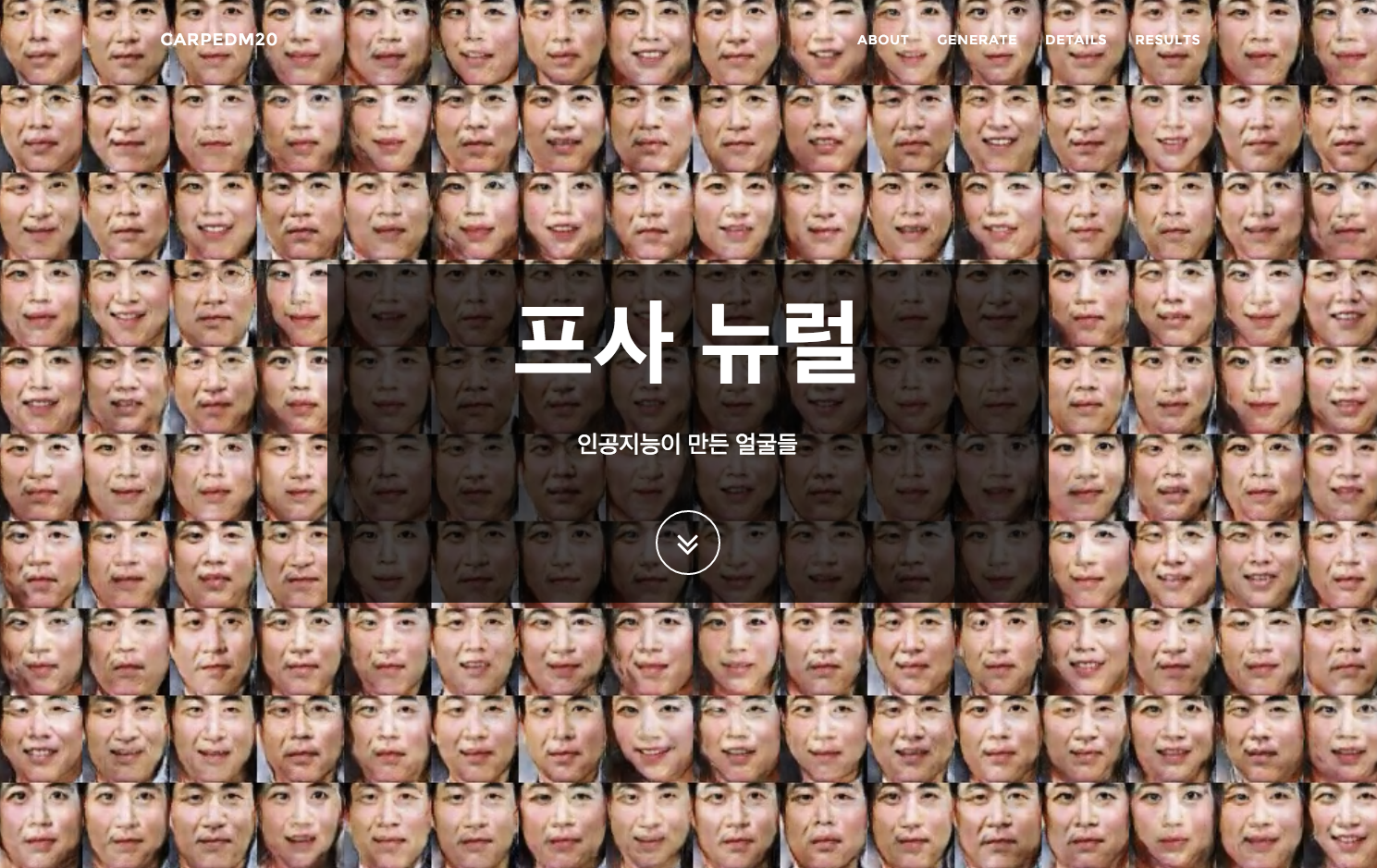

After the 6th epoch:

After the 10th epoch:

More results can be found here and here.

Discriminator and generator losses (on a custom dataset, not celebA).

Histogram of the discriminator's outputs on real and fake images (on a custom dataset, not celebA).
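A histogram like this can be computed by binning D's outputs for a batch of real images and a batch of generated images over shared bins. This is a minimal sketch, assuming D ends in a sigmoid so its outputs are probabilities in [0, 1]; `discriminator_histograms`, `d_real` and `d_fake` are hypothetical names, not taken from this repo.

```python
import numpy as np


def discriminator_histograms(d_real: np.ndarray, d_fake: np.ndarray, bins: int = 10):
    """Bin D's probabilities for real and fake images over the same edges in [0, 1]."""
    edges = np.linspace(0.0, 1.0, bins + 1)
    real_counts, _ = np.histogram(d_real, bins=edges)
    fake_counts, _ = np.histogram(d_fake, bins=edges)
    return edges, real_counts, fake_counts
```

Using shared edges keeps the two histograms directly comparable; a well-trained D pushes real scores toward 1 and fake scores toward 0, while overlapping histograms suggest D can no longer tell them apart. The counts can then be drawn with, for example, matplotlib's `plt.hist(d_real, bins=edges, alpha=0.5)`.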

MasterHM / @MasterHM-ml