This repo is based on the laughter detection model by Gillick et al. and retrains it on the ICSI Meeting corpus.

The data pipeline uses Lhotse, a new Python library for speech and audio data preparation.

This repository consists of three main parts:

- Evaluation Pipeline

- Data Pipeline

- Training Code

The following list outlines which parts of the repository belong to each of them and classifies the parts/files as one of three types:

- from scratch: entirely written by myself
- adapted: code taken from Gillick et al. and adapted
- unmodified: code taken from Gillick et al. and not adapted or modified

- Evaluation Pipeline (from scratch):
  - `analysis`
    - `transcript_parsing/parse.py` + `preprocess.py`: parsing and preprocessing the ICSI transcripts
    - `analyse.py`: main function that parses and evaluates predictions from the .TextGrid files output by the model
    - `output_processing`: scripts for creating .wav files of the laughter occurrences so they can be evaluated manually
  - `visualise.py`: functions for visualising model performance (incl. precision-recall curve and confusion matrix)

-

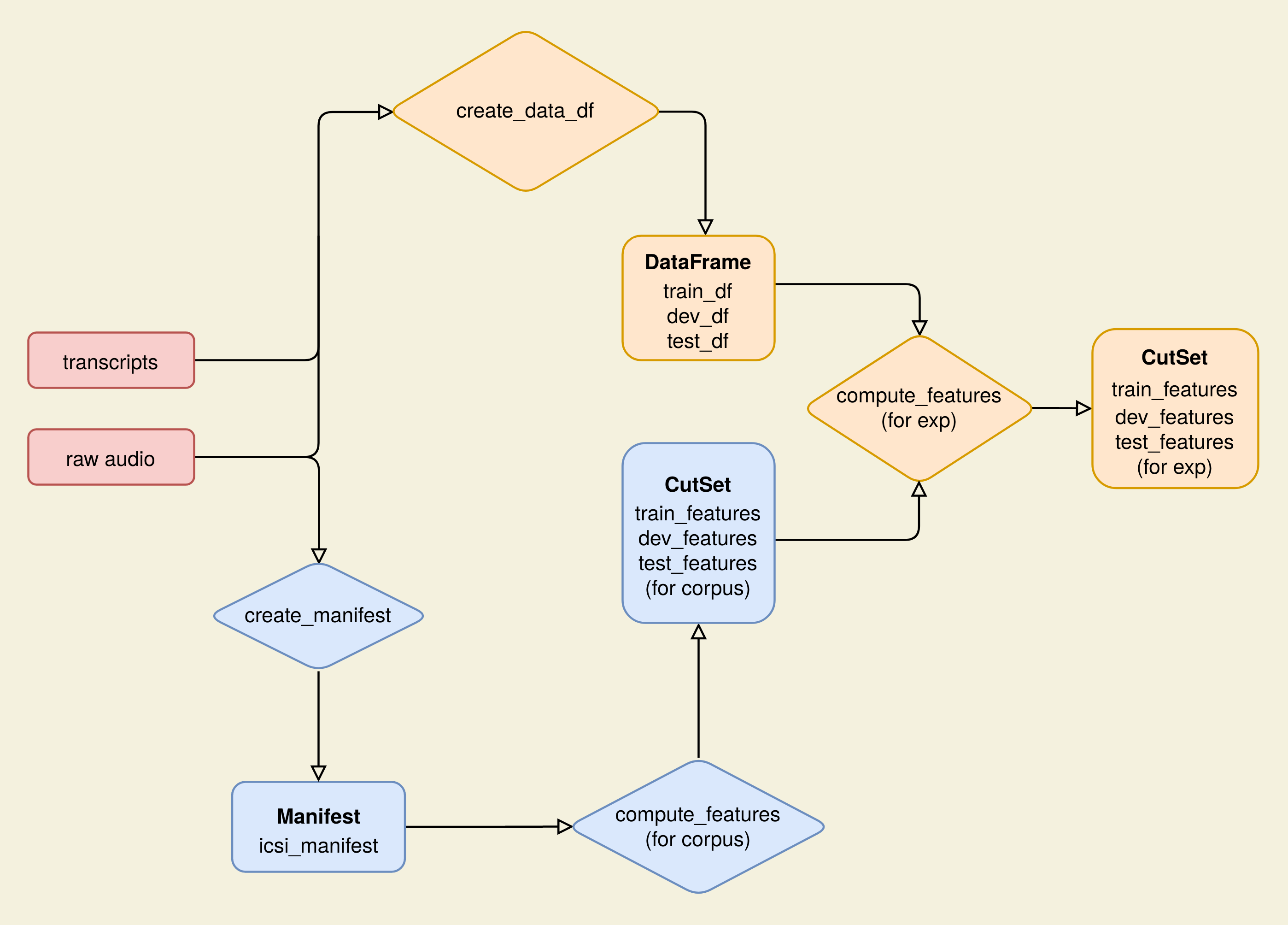

Data Pipeline (from scratch) - also see diagram:

compute_features: computes feature representing the whole corpus and specific subsets of the ICSI corpuscreate_data_df.py: creates a dataframe representing training, development and test-set

- Training Code:
  - `models.py` (unmodified): defines the model architecture
  - `train.py` (adapted): main training code
  - `segment_laughter.py` + `laugh_segmenter.py` (adapted): inference code to run laughter detection on audio files
  - `datasets.py` + `load_data.py` (from scratch): the new LAD (Laugh Activity Detection) Dataset and the new inference Dataset, plus the code for creating them

- Misc:
  - `Demo.ipynb` (from scratch): demonstration of using Lhotse to compute features from a dataframe defining laughter and non-laughter segments
  - `config.py` (adapted): configurations for different parts of the pipeline
  - `results.zip` (N/A): contains the model predictions from the experiments presented in my thesis

Steps to set up the environment from scratch so that training and evaluation can be run:

- Clone this repo
- `cd` into the repo
- Create a Python env and install all packages listed below. Put them in a `requirements.txt` file and run `pip install -r requirements.txt`
- We use Lhotse's available recipe for the ICSI corpus to download the corpus' audio + transcripts
  - Run the Python script `get_icsi_data.py`
    - This will take a while to complete: it downloads all audio and transcriptions for the ICSI corpus
  - After completion
    - you should have a `data/icsi/speech` folder with all your audio files grouped by meeting
    - you should have a `data/icsi/transcripts` folder with all the `.mrt` transcripts
- Now create a `.env` file by copying the `.sample.env` file to a `.env` file
  - You can configure the folders to match your desired folder structure
- Now run `compute_features.py` once to compute the features for the whole corpus
  - The first time, this will parse the transcripts and create indices with laughter and non-laughter segments (see the Other documentation section below)
    - This will take a while (e.g. it took one hour for me)
    - After initial creation the indices are cached and loaded from disk
  - That's done by the `compute_features_per_split()` method in the `main()` function
  - You can comment out the call to `compute_features_for_cuts()` in the `main()` function if you just want to create the features for the whole corpus for now
- Then run `create_data_df` to create a set of training samples
- Then run `compute_features.py` again to create the cutset
  - This is done by the `compute_features_for_cuts()` function in the `main()` function
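Before starting the hour-long feature computation, it can help to confirm that the download step produced the expected layout. The following is a minimal sketch, not part of this repo: the helper name `check_icsi_layout` is hypothetical, and it assumes the default `data/icsi` root (adjust it if your `.env` points elsewhere). It searches for `.mrt` files recursively in case the transcripts sit in sub-folders.

```python
from pathlib import Path


def check_icsi_layout(root="data/icsi"):
    """Hypothetical helper: list meeting folders and .mrt transcripts under root."""
    root = Path(root)
    speech_dir = root / "speech"
    transcripts_dir = root / "transcripts"

    # Audio is expected to be grouped into one sub-folder per meeting.
    meetings = []
    if speech_dir.is_dir():
        meetings = sorted(p.name for p in speech_dir.iterdir() if p.is_dir())

    # Transcripts are expected as .mrt files (searched recursively).
    transcripts = []
    if transcripts_dir.is_dir():
        transcripts = sorted(p.name for p in transcripts_dir.rglob("*.mrt"))

    return {"meetings": meetings, "transcripts": transcripts}


if __name__ == "__main__":
    layout = check_icsi_layout()
    print(
        f"{len(layout['meetings'])} meeting folders, "
        f"{len(layout['transcripts'])} .mrt transcripts"
    )
```

If either count is zero, the download most likely did not finish or the folders are configured differently.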

- `parse.py`:
  - functions for creating dataframes, each containing all audio segments of a certain type (e.g. laughter, speech, etc.), one per row. The columns of all these "segment dataframes" are the same.
    - Columns: ['meeting_id', 'part_id', 'chan_id', 'start', 'end', 'length', 'type', 'laugh_type']
  - Additionally, `parse.py` creates one other dataframe, called `info_df`. This dataframe contains general information about each meeting.
    - Columns of `info_df`: ['meeting_id', 'part_id', 'chan_id', 'length', 'path']
- `preprocess.py`: functions for creating all the indices. An index in this context is a nested mapping. Each index maps a participant in a certain meeting to all transcribed "audio segments" of a certain type (e.g. laughter, speech, etc.) recorded by this participant's microphone. The "audio segments" are taken from the dataframes created in `parse.py`. Each segment is turned into an "openclosed" interval, a datatype provided by the Portion library (TODO link portion library). These intervals are joined into a single disjunction. Portion supports the usual interval operations on such disjunctions, which simplifies later logic, e.g. detecting whether predictions overlap with transcribed events.
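To illustrate what joining segments into a single disjunction and checking for overlap amounts to, here is a plain-Python sketch. It is a stand-in for illustration only: the repo relies on Portion for this, and the function names `merge_intervals` and `overlaps` are hypothetical. Intervals are treated as open-closed, i.e. `(start, end]`.

```python
def merge_intervals(segments):
    """Join (start, end] segments into a sorted list of disjoint intervals."""
    merged = []
    for start, end in sorted(segments):
        if merged and start <= merged[-1][1]:
            # Overlaps or touches the previous interval: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def overlaps(intervals, start, end):
    """True if (start, end] shares at least one point with any interval."""
    return any(start < hi and lo < end for lo, hi in intervals)


# Three transcribed laughter segments; the first two overlap.
laughter = merge_intervals([(10.0, 12.5), (12.0, 14.0), (30.0, 31.0)])
print(laughter)  # [(10.0, 14.0), (30.0, 31.0)]

# A predicted segment counts as a hit if it overlaps a transcribed one.
print(overlaps(laughter, 13.5, 15.0))  # True
print(overlaps(laughter, 14.0, 20.0))  # False: (14, 20] starts after 14
```

With Portion, the same union is written with the `|` operator on `P.openclosed(start, end)` values, as in the index shape shown in this section.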

All indices follow the same structure. Each is a Python dictionary of the following shape:

    {
        meeting_id: {
            tot_len: INT,
            tot_events: INT,
            part_id: P.openclosed(start,end) | P.openclosed(start,end),
            part_id: P.openclosed(start,end) | P.openclosed(start,end)
            ...
        }
        meeting_id: {
            ...
        }
        ...
    }
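As a rough sketch of how such an index could be assembled from segment rows, the example below groups rows by meeting and participant. It is not the repo's implementation: `build_index` is a hypothetical name, the ids are only illustrative, and plain lists of `(start, end)` tuples stand in for the Portion disjunctions. It also assumes that `tot_len` is the summed length of all events in a meeting and `tot_events` their count, which the description above does not state explicitly.

```python
def build_index(rows):
    """Group segment rows into {meeting_id: {tot_len, tot_events, part_id: [...]}}."""
    index = {}
    for row in rows:
        meeting = index.setdefault(
            row["meeting_id"], {"tot_len": 0, "tot_events": 0}
        )
        # Collect this participant's segments (stand-in for a Portion disjunction).
        meeting.setdefault(row["part_id"], []).append((row["start"], row["end"]))
        # Assumed meaning: summed event length and number of events per meeting.
        meeting["tot_len"] += row["end"] - row["start"]
        meeting["tot_events"] += 1
    return index


# Rows use a subset of the segment dataframe columns; values are made up.
rows = [
    {"meeting_id": "Bdb001", "part_id": "me011", "start": 10, "end": 12},
    {"meeting_id": "Bdb001", "part_id": "me011", "start": 30, "end": 31},
    {"meeting_id": "Bdb001", "part_id": "fe016", "start": 11, "end": 14},
]
index = build_index(rows)
print(index["Bdb001"]["me011"])       # [(10, 12), (30, 31)]
print(index["Bdb001"]["tot_events"])  # 3
```

Keeping the totals next to the participant keys mirrors the shape above, so a lookup such as `index[meeting_id][part_id]` returns all segments for one microphone.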