
Creation of MediaStreamTrack from raw PCM stream; Example 18 with a JSON file instead of messaging #2570

Comments

Signaling is out of scope, but there are other resources and tutorials on the web that could help you.

WebAudio is designed for that purpose.

We have discussed providing mechanisms for accessing/transforming/encoding raw media, as part of WebRTC-NV. But currently, WebAudio is the most straightforward way to create a MediaStreamTrack from PCM audio.

WebAudio with

And yet there is a signaling example in the specification, though the example is incomplete. This is the precise case:

In order to avoid using JavaScript Instead we should be able to create a WebAudio has no standardized means to accept dynamic live streams of binary data, besides In this case the closest we can get is using Thus, the three related issues where, with a common interest, it is certainly possible to write out a specification to convert raw audio binary data to a

The reason that specifically asked for signaling example completion as the goal is not to use Chromium extension messaging through Instead, to avoid attempting to pass messages generated asynchronously in a synchronous messaging API, once gather the exact required flow-chart, will experiment with writing and reading SDP as JSON to a single file, in an array of objects, using Native File System at the browser and

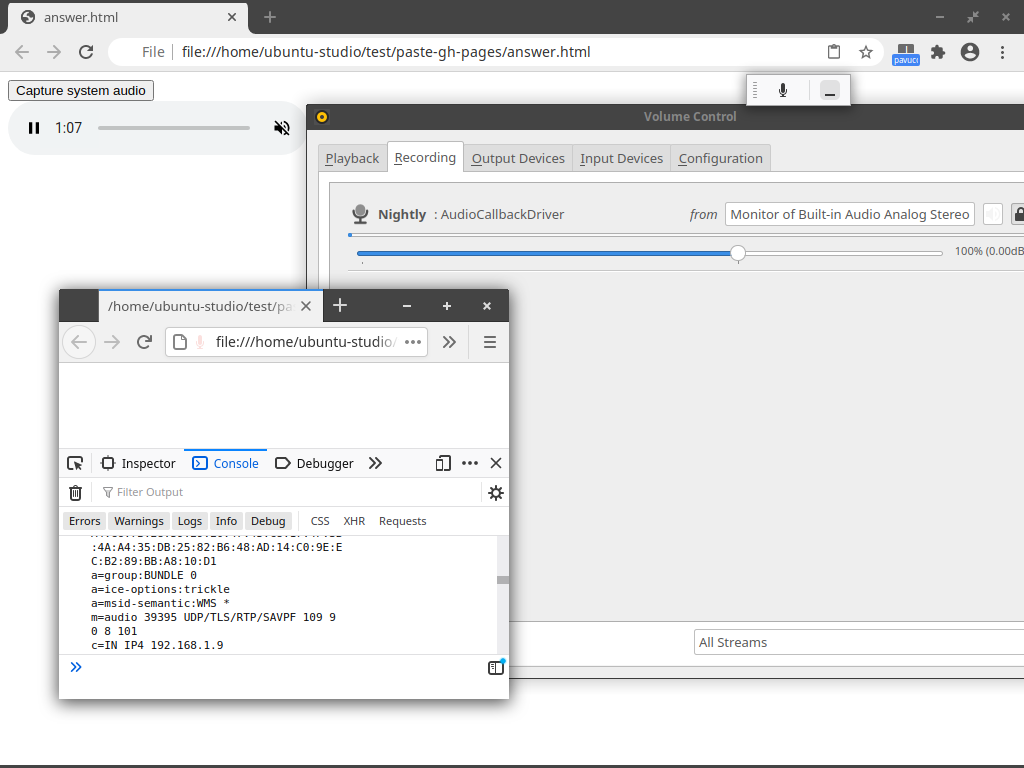

The signaling portion of the Issue is solved. After re-reading and manually performing the paste at https://github.com/fippo/paste several dozen times, gathered the minimal requirements. The use case in this instance is capture and streaming of monitor devices at Chromium browser, which refuses to support capture or listing of monitor devices at Linux with Firefox does support capture of monitor devices at Linux. One solution to achieve the requirement of capturing monitor device at Chromium, or at least gaining access to the device, by any means, is to perform the capture of the device at Nightly, then establish a WebRTC connection with Chromium to access the The use of clipboard is not ideal. Used here to automate the procedure to the extent possible, where Chromium requires focus on the document to read and write to At Nightly set flags at Chromium TODO: Improve signaling method. Establish

Overtaken by Events (proposals for new raw media APIs).

To work around Chromium's refusal to support exposure and capture of monitor devices for `getUserMedia()` on Linux, I have created several workarounds (https://github.com/guest271314/captureSystemAudio/) that, from my perspective, could be simplified.

I am able to get a `ReadableStream` where each value read is raw PCM audio data: 2 channels, 44100 sample rate, s16le.

The current version uses a browser extension and Native Messaging to `fetch()` from localhost, where the output is the monitor device data passed through PHP `passthru()`. Because Chromium extension messaging code does not support transfer, the data is then converted to text and messaged to a different origin.

There has to be a simpler way to do this.
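As a rough illustration of what handling that stream involves, here is a minimal sketch of the conversion step, assuming the format described above (interleaved s16le, 2 channels) and assuming the samples are eventually handed to Web Audio as planar 32-bit floats. The names `FrameAligner` and `deinterleaveS16le` are hypothetical helpers, not part of any API.

```typescript
// Assumed input format, taken from the description above:
// signed 16-bit little-endian, interleaved, 2 channels.
const CHANNELS = 2;
const BYTES_PER_SAMPLE = 2;
const FRAME_BYTES = CHANNELS * BYTES_PER_SAMPLE;

// Hypothetical helper: stream chunks can end in the middle of a frame,
// so the remainder is carried over and prepended to the next chunk.
class FrameAligner {
  private rest = new Uint8Array(0);

  push(chunk: Uint8Array): Uint8Array {
    const joined = new Uint8Array(this.rest.length + chunk.length);
    joined.set(this.rest, 0);
    joined.set(chunk, this.rest.length);
    const usable = joined.length - (joined.length % FRAME_BYTES);
    this.rest = joined.slice(usable);
    return joined.slice(0, usable);
  }
}

// Hypothetical helper: converts whole frames of interleaved s16le bytes
// into [left, right] planes with samples scaled to the range [-1, 1).
function deinterleaveS16le(bytes: Uint8Array): [Float32Array, Float32Array] {
  if (bytes.byteLength % FRAME_BYTES !== 0) {
    throw new RangeError("input must contain whole frames");
  }
  const frames = bytes.byteLength / FRAME_BYTES;
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const left = new Float32Array(frames);
  const right = new Float32Array(frames);
  for (let i = 0; i < frames; i++) {
    const offset = i * FRAME_BYTES;
    left[i] = view.getInt16(offset, true) / 32768;
    right[i] = view.getInt16(offset + BYTES_PER_SAMPLE, true) / 32768;
  }
  return [left, right];
}
```

In a browser, each plane could then be copied into an `AudioBuffer` with `copyToChannel()` and played into the node returned by `AudioContext.createMediaStreamDestination()`, whose `stream` carries an audio `MediaStreamTrack`. That wiring is omitted here, and it still relies on typed arrays, so it does not remove the limitation discussed in this issue.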

Two options occur to me, though there are certainly others that I have not yet conceived of, hence this question:

`MediaStreamTrack` directly from raw PCM input and somehow get that `MediaStreamTrack` exposed at a different origin

Option 1. is probably more involved, though it avoids using `WebAssembly.Memory`, `SharedArrayBuffer`, `ArrayBuffer`, `TypedArray`s, which have limitations both by default design and architecture: https://bugs.chromium.org/p/v8/issues/detail?id=7881#c60

That limitation is observable on 32-bit systems (wasmerio/wasmer-php#121 (comment)): when attempting to dynamically use `WebAssembly.Memory.grow(1)` when the current `value` (`Uint8Array`) plus previously written values exceed the initial or current `SharedArrayBuffer` `byteLength`, allocation of increased memory ultimately cannot succeed; e.g., trying to capture 30 minutes of audio (which is written to memory, while memory grows dynamically) can result in only 16 minutes and 43 seconds of audio being recorded.

Option 2. is probably the simplest in this case. Somehow create a `MediaStreamTrack` from raw PCM input, then write and read offer and answer, and "ICE", negotiation, et al. using a local file (JSON) in order to avoid using JavaScript `ArrayBuffer`, `SharedArrayBuffer`, `WebAssembly.Memory.grow(growNPages)` at all.

However, to achieve that I am asking for the precise flow-chart of exchanges of offer and answer between two `RTCPeerConnection`s, as the instances will be on different origins and Chromium extension messaging could occasionally require reloading the extension. Using Native File System to write and read the file(s) bypasses the need to use messaging, which requires communication between the Native Messaging host, the Chromium extension, and an arbitrary web page.

Can the specification be updated with an example of performing the complete necessary steps to establish a peer connection for both sides of the connection, taking Example 18 https://w3c.github.io/webrtc-pc/#example-18 as the base case, using a single JSON file, or multiple files if needed, instead of signaling (messaging)?

In this case the extension code will make the send-only offer and the arbitrary web page will make the receive-only answer.
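One possible shape for the file-based exchange, as a minimal sketch and not text from the specification: assuming both sides wait for ICE gathering to complete before writing their description (so the candidates are already embedded in the SDP and no separate candidate entries are needed), the file only ever needs two entries. The names `SignalEntry`, `parseLog`, `appendEntry`, and `nextStep` are hypothetical, and the actual reading and writing of the file is left out.

```typescript
type Role = "offerer" | "answerer";

// One entry per description written to the shared JSON file (an array of objects).
interface SignalEntry {
  from: Role;
  type: "offer" | "answer";
  sdp: string;
}

type Step =
  | "write-offer"     // addTransceiver(track, { direction: "sendonly" }), createOffer(),
                      // setLocalDescription(), wait for iceGatheringState "complete",
                      // then write pc.localDescription to the file
  | "wait-for-offer"  // answerer polls the file
  | "write-answer"    // setRemoteDescription(offer), createAnswer(), setLocalDescription(),
                      // wait for iceGatheringState "complete", then write pc.localDescription
  | "wait-for-answer" // offerer polls the file
  | "apply-answer"    // offerer calls setRemoteDescription(answer)
  | "done";

// An empty or whitespace-only file is treated as an empty log.
function parseLog(text: string): SignalEntry[] {
  return text.trim() === "" ? [] : (JSON.parse(text) as SignalEntry[]);
}

// Returns the new file contents with the entry appended.
function appendEntry(text: string, entry: SignalEntry): string {
  return JSON.stringify([...parseLog(text), entry]);
}

// Decides what a given side should do next, based only on the file contents.
function nextStep(role: Role, log: SignalEntry[]): Step {
  const hasOffer = log.some((e) => e.type === "offer");
  const hasAnswer = log.some((e) => e.type === "answer");
  if (role === "offerer") {
    if (!hasOffer) return "write-offer";
    return hasAnswer ? "apply-answer" : "wait-for-answer";
  }
  if (!hasOffer) return "wait-for-offer";
  return hasAnswer ? "done" : "write-answer";
}
```

Each side would re-read the file, call `nextStep()` with its own role, and perform the `RTCPeerConnection` calls named in the comments. Waiting for gathering to finish trades connection setup time for a simpler file format.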

Ideally, we should be able to somehow just pass the raw PCM to a method of `RTCPeerConnection`, et al. and not use Web Audio API `AudioWorklet` or `TypedArray` or `ArrayBuffer` at all. Is that possible?

Alternatively, are there any other ways to solve this problem that I am not considering?
