diff --git a/README.md b/README.md

index 84bde9ce1..5678662c2 100644

--- a/README.md

+++ b/README.md

@@ -34,8 +34,14 @@ This repository hosts the code of LightRAG. The structure of this code is based

+

+

+

+

+

+

+

+

+

+ 🎉 News

+

-## 🎉 News

- [x] [2025.02.05]🎯📢Our team has released [VideoRAG](/~https://github.com/HKUDS/VideoRAG) understanding extremely long-context videos.

- [x] [2025.01.13]🎯📢Our team has released [MiniRAG](/~https://github.com/HKUDS/MiniRAG) making RAG simpler with small models.

@@ -54,12 +60,20 @@ This repository hosts the code of LightRAG. The structure of this code is based

- [x] [2024.10.16]🎯📢LightRAG now supports [Ollama models](/~https://github.com/HKUDS/LightRAG?tab=readme-ov-file#quick-start)!

- [x] [2024.10.15]🎯📢LightRAG now supports [Hugging Face models](/~https://github.com/HKUDS/LightRAG?tab=readme-ov-file#quick-start)!

-## Algorithm Flowchart

+

+

+

+

+ Algorithm Flowchart

+

+

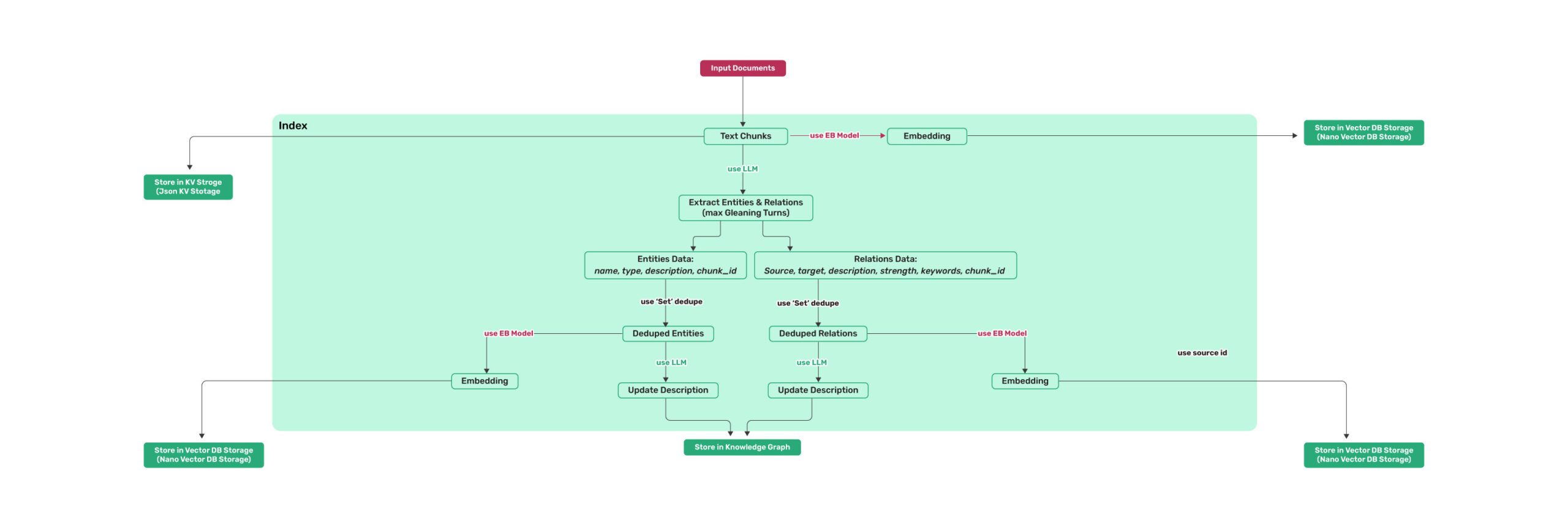

*Figure 1: LightRAG Indexing Flowchart - Img Caption : [Source](https://learnopencv.com/lightrag/)*

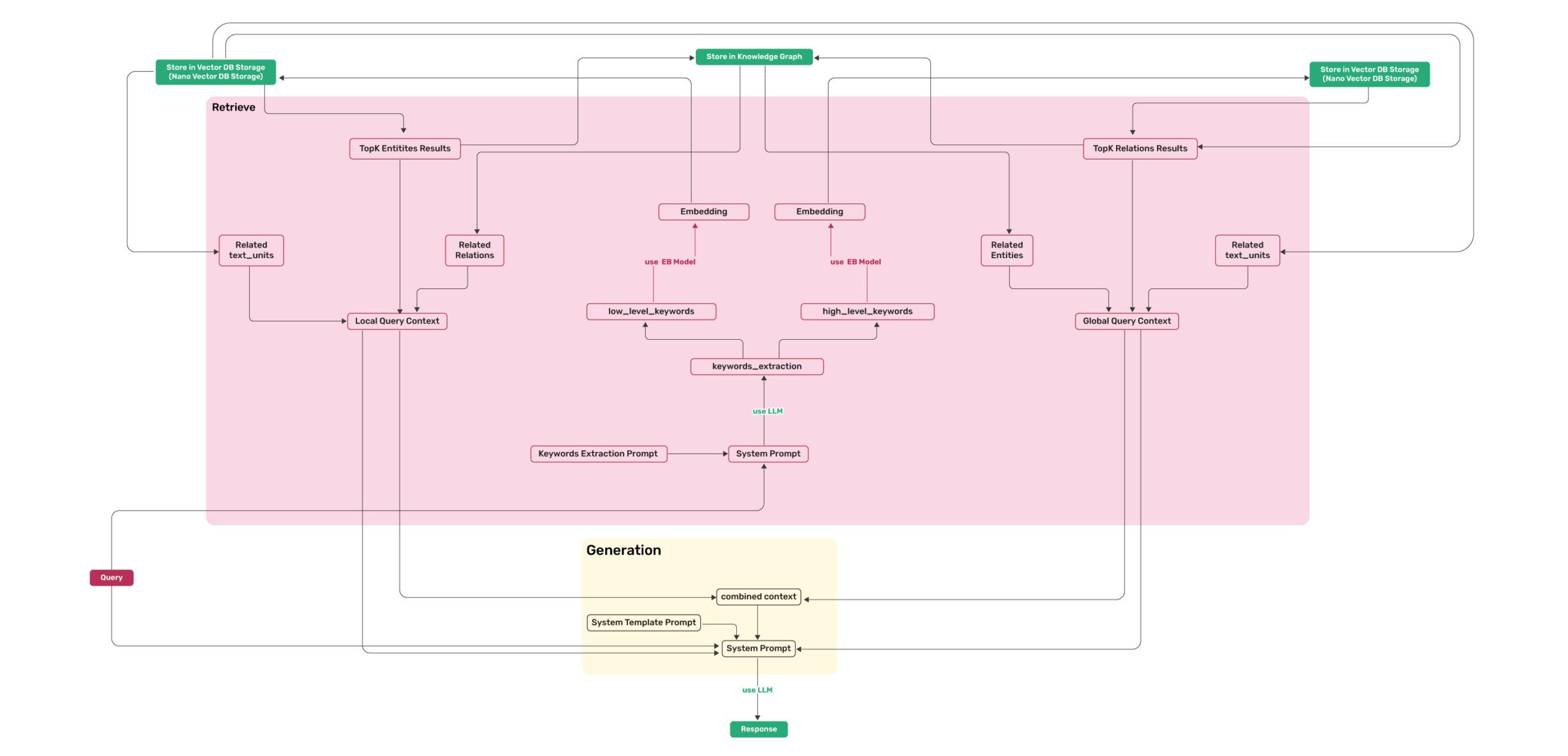

*Figure 2: LightRAG Retrieval and Querying Flowchart - Img Caption : [Source](https://learnopencv.com/lightrag/)*

+

+

## Install

* Install from source (Recommend)

@@ -81,6 +95,9 @@ pip install lightrag-hku

```bash

curl https://raw.githubusercontent.com/gusye1234/nano-graphrag/main/tests/mock_data.txt > ./book.txt

```

+

+## Query

+

Use the below Python snippet (in a script) to initialize LightRAG and perform queries:

```python

@@ -88,124 +105,67 @@ import os

from lightrag import LightRAG, QueryParam

from lightrag.llm.openai import gpt_4o_mini_complete, gpt_4o_complete, openai_embed

-#########

-# Uncomment the below two lines if running in a jupyter notebook to handle the async nature of rag.insert()

-# import nest_asyncio

-# nest_asyncio.apply()

-#########

-

-WORKING_DIR = "./dickens"

-

-if not os.path.exists(WORKING_DIR):

- os.mkdir(WORKING_DIR)

-

rag = LightRAG(

- working_dir=WORKING_DIR,

+ working_dir="your/path",

embedding_func=openai_embed,

- llm_model_func=gpt_4o_mini_complete # Use gpt_4o_mini_complete LLM model

- # llm_model_func=gpt_4o_complete # Optionally, use a stronger model

+ llm_model_func=gpt_4o_mini_complete

)

-with open("./book.txt") as f:

- rag.insert(f.read())

+# Insert text

+rag.insert("Your text")

# Perform naive search

-print(rag.query("What are the top themes in this story?", param=QueryParam(mode="naive")))

-

+mode="naive"

# Perform local search

-print(rag.query("What are the top themes in this story?", param=QueryParam(mode="local")))

-

+mode="local"

# Perform global search

-print(rag.query("What are the top themes in this story?", param=QueryParam(mode="global")))

-

+mode="global"

# Perform hybrid search

-print(rag.query("What are the top themes in this story?", param=QueryParam(mode="hybrid")))

-

-# Perform mix search (Knowledge Graph + Vector Retrieval)

-# Mix mode combines knowledge graph and vector search:

-# - Uses both structured (KG) and unstructured (vector) information

-# - Provides comprehensive answers by analyzing relationships and context

-# - Supports image content through HTML img tags

-# - Allows control over retrieval depth via top_k parameter

-print(rag.query("What are the top themes in this story?", param=QueryParam(

- mode="mix")))

-```

-

-### Conversation History Support

-LightRAG now supports multi-turn dialogue through the conversation history feature. Here's how to use it:

-

-```python

-from lightrag import LightRAG, QueryParam

+mode="hybrid"

+# Mix mode integrates knowledge graph and vector retrieval.

+mode="mix"  # only the last assignment is used; keep the mode you want

-# Initialize LightRAG

-rag = LightRAG(working_dir=WORKING_DIR)

-

-# Create conversation history

-conversation_history = [

- {"role": "user", "content": "What is the main character's attitude towards Christmas?"},

- {"role": "assistant", "content": "At the beginning of the story, Ebenezer Scrooge has a very negative attitude towards Christmas..."},

- {"role": "user", "content": "How does his attitude change?"}

-]

-

-# Create query parameters with conversation history

-query_param = QueryParam(

- mode="mix", # or any other mode: "local", "global", "hybrid"

- conversation_history=conversation_history, # Add the conversation history

- history_turns=3 # Number of recent conversation turns to consider

-)

-

-# Make a query that takes into account the conversation history

-response = rag.query(

- "What causes this change in his character?",

- param=query_param

+response = rag.query(

+ "What are the top themes in this story?",

+ param=QueryParam(mode=mode)

)

+print(response)

```

-### Custom Prompt Support

-LightRAG now supports custom prompts for fine-tuned control over the system's behavior. Here's how to use it:

+### Query Param

```python

-from lightrag import LightRAG, QueryParam

-

-# Initialize LightRAG

-rag = LightRAG(working_dir=WORKING_DIR)

-

-# Create query parameters

-query_param = QueryParam(

- mode="hybrid", # or other mode: "local", "global", "hybrid", "mix" and "naive"

-)

-

-# Example 1: Using the default system prompt

-response_default = rag.query(

- "What are the primary benefits of renewable energy?",

- param=query_param

-)

-print(response_default)

-

-# Example 2: Using a custom prompt

-custom_prompt = """

-You are an expert assistant in environmental science. Provide detailed and structured answers with examples.

----Conversation History---

-{history}

-

----Knowledge Base---

-{context_data}

-

----Response Rules---

-

-- Target format and length: {response_type}

-"""

-response_custom = rag.query(

- "What are the primary benefits of renewable energy?",

- param=query_param,

- system_prompt=custom_prompt # Pass the custom prompt

-)

-print(response_custom)

+class QueryParam:

+ mode: Literal["local", "global", "hybrid", "naive", "mix"] = "global"

+ """Specifies the retrieval mode:

+ - "local": Focuses on context-dependent information.

+ - "global": Utilizes global knowledge.

+ - "hybrid": Combines local and global retrieval methods.

+ - "naive": Performs a basic search without advanced techniques.

+ - "mix": Integrates knowledge graph and vector retrieval:

+ - Uses both structured (KG) and unstructured (vector) information

+ - Provides comprehensive answers by analyzing relationships and context

+ - Supports image content through HTML img tags

+ - Allows control over retrieval depth via top_k parameter

+ """

+ only_need_context: bool = False

+ """If True, only returns the retrieved context without generating a response."""

+ response_type: str = "Multiple Paragraphs"

+ """Defines the response format. Examples: 'Multiple Paragraphs', 'Single Paragraph', 'Bullet Points'."""

+ top_k: int = 60

+ """Number of top items to retrieve. Represents entities in 'local' mode and relationships in 'global' mode."""

+ max_token_for_text_unit: int = 4000

+ """Maximum number of tokens allowed for each retrieved text chunk."""

+ max_token_for_global_context: int = 4000

+ """Maximum number of tokens allocated for relationship descriptions in global retrieval."""

+ max_token_for_local_context: int = 4000

+ """Maximum number of tokens allocated for entity descriptions in local retrieval."""

+ ...

```

+> The default value of `top_k` can be changed via the `TOP_K` environment variable.
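The sketch below shows how an environment-variable default such as `TOP_K` typically works. It uses a hypothetical `QueryParamSketch` dataclass, not LightRAG's actual `QueryParam`. The sketch reads `TOP_K` each time an instance is created and falls back to 60. It is an assumption whether LightRAG reads the variable at import time or per instance, so setting `TOP_K` before importing `lightrag` is the safer habit.

```python
import os
from dataclasses import dataclass, field


def _env_int(name: str, default: int) -> int:
    """Read an integer from the environment, falling back to `default` if unset or empty."""
    raw = os.getenv(name)
    return int(raw) if raw is not None and raw.strip() else default


@dataclass
class QueryParamSketch:
    # Hypothetical stand-in for QueryParam; only top_k is modelled.
    # default_factory re-reads TOP_K every time an instance is created.
    top_k: int = field(default_factory=lambda: _env_int("TOP_K", 60))


os.environ["TOP_K"] = "20"
print(QueryParamSketch().top_k)          # 20
print(QueryParamSketch(top_k=5).top_k)   # 5: an explicit argument overrides the env var
```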

- Using Open AI-like APIs

+ Using OpenAI-like APIs

* LightRAG also supports Open AI-like chat/embeddings APIs:

```python

@@ -243,7 +203,7 @@ rag = LightRAG(

- Using Hugging Face Models

+ Using Hugging Face Models

* If you want to use Hugging Face models, you only need to set LightRAG as follows:

@@ -274,7 +234,7 @@ rag = LightRAG(

- Using Ollama Models

+ Using Ollama Models

### Overview

If you want to use Ollama models, you need to pull model you plan to use and embedding model, for example `nomic-embed-text`.

@@ -353,36 +313,181 @@ In order to run this experiment on low RAM GPU you should select small model and

-### Query Param

+

+ Conversation History Support

+

+LightRAG now supports multi-turn dialogue through the conversation history feature. Here's how to use it:

```python

-class QueryParam:

- mode: Literal["local", "global", "hybrid", "naive", "mix"] = "global"

- """Specifies the retrieval mode:

- - "local": Focuses on context-dependent information.

- - "global": Utilizes global knowledge.

- - "hybrid": Combines local and global retrieval methods.

- - "naive": Performs a basic search without advanced techniques.

- - "mix": Integrates knowledge graph and vector retrieval.

- """

- only_need_context: bool = False

- """If True, only returns the retrieved context without generating a response."""

- response_type: str = "Multiple Paragraphs"

- """Defines the response format. Examples: 'Multiple Paragraphs', 'Single Paragraph', 'Bullet Points'."""

- top_k: int = 60

- """Number of top items to retrieve. Represents entities in 'local' mode and relationships in 'global' mode."""

- max_token_for_text_unit: int = 4000

- """Maximum number of tokens allowed for each retrieved text chunk."""

- max_token_for_global_context: int = 4000

- """Maximum number of tokens allocated for relationship descriptions in global retrieval."""

- max_token_for_local_context: int = 4000

- """Maximum number of tokens allocated for entity descriptions in local retrieval."""

- ...

+from lightrag import LightRAG, QueryParam

+

+# Initialize LightRAG

+rag = LightRAG(working_dir=WORKING_DIR)

+

+# Create conversation history

+conversation_history = [

+ {"role": "user", "content": "What is the main character's attitude towards Christmas?"},

+ {"role": "assistant", "content": "At the beginning of the story, Ebenezer Scrooge has a very negative attitude towards Christmas..."},

+ {"role": "user", "content": "How does his attitude change?"}

+]

+

+# Create query parameters with conversation history

+query_param = QueryParam(

+ mode="mix", # or any other mode: "local", "global", "hybrid"

+ conversation_history=conversation_history, # Add the conversation history

+ history_turns=3 # Number of recent conversation turns to consider

+)

+

+# Make a query that takes into account the conversation history

+response = rag.query(

+ "What causes this change in his character?",

+ param=query_param

+)

```
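The text above does not define how `history_turns` counts turns. The sketch below assumes a turn is one user message paired with the assistant reply that follows it, and it keeps only the most recent `history_turns` pairs. `recent_turns` is a hypothetical helper, not part of LightRAG.

```python
from typing import List, Optional, Tuple, TypedDict


class Message(TypedDict):
    role: str      # "user" or "assistant"
    content: str


def recent_turns(history: List[Message], history_turns: int) -> List[Tuple[str, str]]:
    """Pair each user message with the assistant reply that follows it and keep
    only the last `history_turns` pairs. Assumption: a trailing user message
    without a reply is not counted as a turn."""
    turns: List[Tuple[str, str]] = []
    pending_user: Optional[str] = None
    for msg in history:
        if msg["role"] == "user":
            pending_user = msg["content"]
        elif msg["role"] == "assistant" and pending_user is not None:
            turns.append((pending_user, msg["content"]))
            pending_user = None
    return turns[-history_turns:] if history_turns > 0 else []


conversation_history: List[Message] = [
    {"role": "user", "content": "What is the main character's attitude towards Christmas?"},
    {"role": "assistant", "content": "At the beginning of the story, Ebenezer Scrooge has a very negative attitude towards Christmas..."},
    {"role": "user", "content": "How does his attitude change?"},
]
print(len(recent_turns(conversation_history, history_turns=3)))  # 1
```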

-> default value of Top_k can be change by environment variables TOP_K.

+

-### Batch Insert

+

+ Custom Prompt Support

+

+LightRAG now supports custom prompts for fine-grained control over the system's behavior. Here's how to use it:

+

+```python

+from lightrag import LightRAG, QueryParam

+

+# Initialize LightRAG

+rag = LightRAG(working_dir=WORKING_DIR)

+

+# Create query parameters

+query_param = QueryParam(

+ mode="hybrid", # or another mode: "local", "global", "mix", or "naive"

+)

+

+# Example 1: Using the default system prompt

+response_default = rag.query(

+ "What are the primary benefits of renewable energy?",

+ param=query_param

+)

+print(response_default)

+

+# Example 2: Using a custom prompt

+custom_prompt = """

+You are an expert assistant in environmental science. Provide detailed and structured answers with examples.

+---Conversation History---

+{history}

+

+---Knowledge Base---

+{context_data}

+

+---Response Rules---

+

+- Target format and length: {response_type}

+"""

+response_custom = rag.query(

+ "What are the primary benefits of renewable energy?",

+ param=query_param,

+ system_prompt=custom_prompt # Pass the custom prompt

+)

+print(response_custom)

+```
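The custom prompt above keeps the `{history}`, `{context_data}` and `{response_type}` placeholders. This section assumes, without confirming, that LightRAG fills them with Python's `str.format`. Under that assumption, a custom prompt should keep all three placeholders and write any literal braces as `{{` and `}}`. The hypothetical helper below uses the standard library's `string.Formatter` to report which expected placeholders a template is missing.

```python
from string import Formatter
from typing import Set

# Assumption: the fields LightRAG fills in, taken from the example prompt above.
EXPECTED_FIELDS = {"history", "context_data", "response_type"}


def prompt_fields(template: str) -> Set[str]:
    """Names of all {placeholders} in a str.format-style template ({{ }} are literals)."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def missing_fields(template: str) -> Set[str]:
    """Expected placeholders that the template does not contain."""
    return EXPECTED_FIELDS - prompt_fields(template)


custom_prompt = """
You are an expert assistant in environmental science.
---Conversation History---
{history}
---Knowledge Base---
{context_data}
"""
print(missing_fields(custom_prompt))  # {'response_type'}
```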

+

+

+

+

+ Separate Keyword Extraction

+

+We've introduced a new function `query_with_separate_keyword_extraction` to enhance the keyword extraction capabilities. This function separates the keyword extraction process from the user's prompt, focusing solely on the query to improve the relevance of extracted keywords.

+

+##### How It Works

+The function operates by dividing the input into two parts:

+- `User Query`

+- `Prompt`

+

+It then performs keyword extraction exclusively on the `user query`. This separation ensures that the extraction process is focused and relevant, unaffected by any additional language in the `prompt`. It also allows the `prompt` to serve purely for response formatting, maintaining the intent and clarity of the user's original question.

+

+##### Usage Example

+This example shows how to use the `prompt` argument to tailor responses for educational content, with detailed explanations for older students.

+

+```python

+rag.query_with_separate_keyword_extraction(

+ query="Explain the law of gravity",

+ prompt="Provide a detailed explanation suitable for high school students studying physics.",

+ param=QueryParam(mode="hybrid")

+)

+```
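The query/prompt split can be illustrated with a toy sketch. LightRAG uses an LLM for keyword extraction. The regex-and-stopword extractor below is only a stand-in, and `extract_keywords` and `build_request` are hypothetical names. The sketch shows that wording in the formatting prompt (for example "physics") does not leak into the keywords used for retrieval.

```python
import re
from typing import Dict, List

# Toy stand-in for LLM keyword extraction (hypothetical): lowercase words minus stopwords.
STOPWORDS = {"a", "an", "the", "of", "for", "to", "in", "and", "or", "is", "are"}


def extract_keywords(text: str) -> List[str]:
    """Return lowercase alphabetic tokens that are not stopwords, in order."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def build_request(query: str, prompt: str) -> Dict[str, object]:
    # Keywords come from the user query only; the prompt is kept aside and
    # would only be used when the final answer is generated.
    return {"keywords": extract_keywords(query), "answer_instructions": prompt}


query = "Explain the law of gravity"
prompt = "Provide a detailed explanation suitable for high school students studying physics."
print(build_request(query, prompt)["keywords"])        # ['explain', 'law', 'gravity']
print("physics" in extract_keywords(query + " " + prompt))  # True: mixing them leaks prompt words
```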

+

+

+

+

+ Insert Custom KG

+

+```python

+rag = LightRAG(

+ working_dir=WORKING_DIR,

+ llm_model_func=llm_model_func,

+ embedding_func=EmbeddingFunc(

+ embedding_dim=embedding_dimension,

+ max_token_size=8192,

+ func=embedding_func,

+ ),

+)

+

+custom_kg = {

+ "entities": [

+ {

+ "entity_name": "CompanyA",

+ "entity_type": "Organization",

+ "description": "A major technology company",

+ "source_id": "Source1"

+ },

+ {

+ "entity_name": "ProductX",

+ "entity_type": "Product",

+ "description": "A popular product developed by CompanyA",

+ "source_id": "Source1"

+ }

+ ],

+ "relationships": [

+ {

+ "src_id": "CompanyA",

+ "tgt_id": "ProductX",

+ "description": "CompanyA develops ProductX",

+ "keywords": "develop, produce",

+ "weight": 1.0,

+ "source_id": "Source1"

+ }

+ ],

+ "chunks": [

+ {

+ "content": "ProductX, developed by CompanyA, has revolutionized the market with its cutting-edge features.",

+ "source_id": "Source1",

+ },

+ {

+ "content": "PersonA is a prominent researcher at UniversityB, focusing on artificial intelligence and machine learning.",

+ "source_id": "Source2",

+ },

+ {

+ "content": "None",

+ "source_id": "UNKNOWN",

+ },

+ ],

+}

+

+rag.insert_custom_kg(custom_kg)

+```
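Before calling `insert_custom_kg`, it can help to check that every relationship endpoint names a declared entity. The README does not say how `insert_custom_kg` handles an unknown `src_id` or `tgt_id`. The hypothetical `dangling_endpoints` helper below is therefore only a pre-insert sanity check, built on the field names used in `custom_kg` above.

```python
from typing import Any, Dict, List


def dangling_endpoints(custom_kg: Dict[str, List[Dict[str, Any]]]) -> List[str]:
    """Return src_id/tgt_id values in "relationships" that match no "entity_name"."""
    names = {e["entity_name"] for e in custom_kg.get("entities", [])}
    missing: List[str] = []
    for rel in custom_kg.get("relationships", []):
        for key in ("src_id", "tgt_id"):
            if rel[key] not in names and rel[key] not in missing:
                missing.append(rel[key])
    return missing


kg = {
    "entities": [
        {"entity_name": "CompanyA", "entity_type": "Organization"},
        {"entity_name": "ProductX", "entity_type": "Product"},
    ],
    "relationships": [
        {"src_id": "CompanyA", "tgt_id": "ProductX", "description": "CompanyA develops ProductX"},
    ],
}
print(dangling_endpoints(kg))  # []
kg["relationships"].append(
    {"src_id": "PersonA", "tgt_id": "UniversityB", "description": "PersonA works at UniversityB"}
)
print(dangling_endpoints(kg))  # ['PersonA', 'UniversityB']
```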

+

+

+## Insert

+

+#### Basic Insert

+

+```python

+# Basic Insert

+rag.insert("Text")

+```

+

+

+ Batch Insert

```python

# Basic Batch Insert: Insert multiple texts at once

@@ -404,7 +509,11 @@ The `insert_batch_size` parameter in `addon_params` controls how many documents

- Providing better progress tracking

- Default value is 10 if not specified
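The following minimal sketch shows how a batch size partitions the input list. It assumes documents are taken in order, in slices of `insert_batch_size`. `iter_batches` is a hypothetical helper, and LightRAG's internal loop may differ.

```python
from typing import Iterator, List, Sequence


def iter_batches(docs: Sequence[str], insert_batch_size: int = 10) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `insert_batch_size` documents, in order."""
    if insert_batch_size < 1:
        raise ValueError("insert_batch_size must be >= 1")
    for start in range(0, len(docs), insert_batch_size):
        yield list(docs[start:start + insert_batch_size])


docs = [f"doc {i}" for i in range(25)]
print([len(batch) for batch in iter_batches(docs)])  # [10, 10, 5]
```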

-### Incremental Insert

+

+

+

+

+ Incremental Insert

```python

# Incremental Insert: Insert new documents into an existing LightRAG instance

@@ -422,7 +531,11 @@ with open("./newText.txt") as f:

rag.insert(f.read())

```
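Incremental insertion depends on recognizing documents that are already stored. The sketch below assumes documents are keyed by an MD5 hash of their stripped content with a `doc-` prefix. This is an assumption about LightRAG's internals. `doc_id` and `new_documents` are hypothetical helpers that only illustrate skipping duplicates.

```python
import hashlib
from typing import Dict, Iterable, Set


def doc_id(text: str) -> str:
    # Assumption: documents are keyed by an MD5 hash of their stripped content.
    return "doc-" + hashlib.md5(text.strip().encode("utf-8")).hexdigest()


def new_documents(existing_ids: Set[str], texts: Iterable[str]) -> Dict[str, str]:
    """Return {doc_id: text} for texts not already stored, de-duplicating within the batch too."""
    fresh: Dict[str, str] = {}
    for text in texts:
        key = doc_id(text)
        if key not in existing_ids and key not in fresh:
            fresh[key] = text
    return fresh


stored_ids = {doc_id("A Christmas Carol, Stave One ...")}
batch = ["A Christmas Carol, Stave One ...", "A brand-new chapter", "A brand-new chapter  "]
print(len(new_documents(stored_ids, batch)))  # 1
```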

-### Insert using Pipeline

+

+

+

+ Insert using Pipeline

+

The `apipeline_enqueue_documents` and `apipeline_process_enqueue_documents` functions allow you to perform incremental insertion of documents into the graph.

This is useful for scenarios where you want to process documents in the background while still allowing the main thread to continue executing.

@@ -436,28 +549,27 @@ await rag.apipeline_enqueue_documents(input)

await rag.apipeline_process_enqueue_documents(input)

```
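The split between enqueueing and processing follows a producer/consumer pattern. The sketch below illustrates it with `asyncio.Queue` in a hypothetical `ToyPipeline` class. It is not LightRAG's implementation, and the `await asyncio.sleep(0)` line stands in for the real chunking and extraction work.

```python
import asyncio
from typing import List


class ToyPipeline:
    """Hypothetical two-step pipeline (not LightRAG's implementation):
    enqueue() only records documents, process() drains the queue later."""

    def __init__(self) -> None:
        self.queue: "asyncio.Queue[str]" = asyncio.Queue()
        self.processed: List[str] = []

    async def enqueue(self, docs: List[str]) -> None:
        for doc in docs:
            await self.queue.put(doc)

    async def process(self) -> None:
        while not self.queue.empty():
            doc = self.queue.get_nowait()
            await asyncio.sleep(0)  # stand-in for chunking / entity extraction
            self.processed.append(doc)
            self.queue.task_done()


async def main() -> List[str]:
    pipe = ToyPipeline()
    await pipe.enqueue(["first doc", "second doc"])
    # Processing runs as a separate task, so the caller could do other work here.
    worker = asyncio.create_task(pipe.process())
    await worker
    return pipe.processed


print(asyncio.run(main()))  # ['first doc', 'second doc']
```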

-### Separate Keyword Extraction

-We've introduced a new function `query_with_separate_keyword_extraction` to enhance the keyword extraction capabilities. This function separates the keyword extraction process from the user's prompt, focusing solely on the query to improve the relevance of extracted keywords.

-

-##### How It Works?

-The function operates by dividing the input into two parts:

-- `User Query`

-- `Prompt`

+

-It then performs keyword extraction exclusively on the `user query`. This separation ensures that the extraction process is focused and relevant, unaffected by any additional language in the `prompt`. It also allows the `prompt` to serve purely for response formatting, maintaining the intent and clarity of the user's original question.

+

+ Insert Multi-file Type Support

-##### Usage Example

-This `example` shows how to tailor the function for educational content, focusing on detailed explanations for older students.

+The `textract` library can read file types such as TXT, DOCX, PPTX, CSV, and PDF.

```python

-rag.query_with_separate_keyword_extraction(

- query="Explain the law of gravity",

- prompt="Provide a detailed explanation suitable for high school students studying physics.",

- param=QueryParam(mode="hybrid")

-)

+import textract

+

+file_path = 'TEXT.pdf'

+text_content = textract.process(file_path)

+

+rag.insert(text_content.decode('utf-8'))

```

+

+

+### Storage

-### Using Neo4J for Storage

+

+ Using Neo4J for Storage

* For production level scenarios you will most likely want to leverage an enterprise solution

* for KG storage. Running Neo4J in Docker is recommended for seamless local testing.

@@ -484,7 +596,11 @@ rag = LightRAG(

```

see test_neo4j.py for a working example.

-### Using PostgreSQL for Storage

+

+

+

+ Using PostgreSQL for Storage

+

For production level scenarios you will most likely want to leverage an enterprise solution. PostgreSQL can provide a one-stop solution for you as KV store, VectorDB (pgvector) and GraphDB (apache AGE).

* PostgreSQL is lightweight,the whole binary distribution including all necessary plugins can be zipped to 40MB: Ref to [Windows Release](/~https://github.com/ShanGor/apache-age-windows/releases/tag/PG17%2Fv1.5.0-rc0) as it is easy to install for Linux/Mac.

* If you prefer docker, please start with this image if you are a beginner to avoid hiccups (DO read the overview): https://hub.docker.com/r/shangor/postgres-for-rag

@@ -529,7 +645,11 @@ For production level scenarios you will most likely want to leverage an enterpri

>

> You can Compile the AGE from source code and fix it.

-### Using Faiss for Storage

+

+

+

+ Using Faiss for Storage

+

- Install the required dependencies:

```

pip install faiss-cpu

@@ -560,62 +680,8 @@ async def embedding_func(texts: list[str]) -> np.ndarray:

)

```

-### Insert Custom KG

-

-```python

-rag = LightRAG(

- working_dir=WORKING_DIR,

- llm_model_func=llm_model_func,

- embedding_func=EmbeddingFunc(

- embedding_dim=embedding_dimension,

- max_token_size=8192,

- func=embedding_func,

- ),

-)

-

-custom_kg = {

- "entities": [

- {

- "entity_name": "CompanyA",

- "entity_type": "Organization",

- "description": "A major technology company",

- "source_id": "Source1"

- },

- {

- "entity_name": "ProductX",

- "entity_type": "Product",

- "description": "A popular product developed by CompanyA",

- "source_id": "Source1"

- }

- ],

- "relationships": [

- {

- "src_id": "CompanyA",

- "tgt_id": "ProductX",

- "description": "CompanyA develops ProductX",

- "keywords": "develop, produce",

- "weight": 1.0,

- "source_id": "Source1"

- }

- ],

- "chunks": [

- {

- "content": "ProductX, developed by CompanyA, has revolutionized the market with its cutting-edge features.",

- "source_id": "Source1",

- },

- {

- "content": "PersonA is a prominent researcher at UniversityB, focusing on artificial intelligence and machine learning.",

- "source_id": "Source2",

- },

- {

- "content": "None",

- "source_id": "UNKNOWN",

- },

- ],

-}

+

-rag.insert_custom_kg(custom_kg)

-```

### Delete

```python

@@ -637,18 +703,6 @@ rag.delete_by_entity("Project Gutenberg")

rag.delete_by_doc_id("doc_id")

```

-### Multi-file Type Support

-

-The `textract` supports reading file types such as TXT, DOCX, PPTX, CSV, and PDF.

-

-```python

-import textract

-

-file_path = 'TEXT.pdf'

-text_content = textract.process(file_path)

-

-rag.insert(text_content.decode('utf-8'))

-```

### Graph Visualization

@@ -806,6 +860,9 @@ if __name__ == "__main__":

### LightRAG init parameters

+

+ Parameters

+

| **Parameter** | **Type** | **Explanation** | **Default** |

|----------------------------------------------| --- |-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------------------------------------------------------------------------------------|

| **working\_dir** | `str` | Directory where the cache will be stored | `lightrag_cache+timestamp` |

@@ -836,6 +893,8 @@ if __name__ == "__main__":

| **embedding\_cache\_config** | `dict` | Configuration for question-answer caching. Contains three parameters:

- `enabled`: Boolean value to enable/disable cache lookup functionality. When enabled, the system will check cached responses before generating new answers.

- `similarity_threshold`: Float value (0-1), similarity threshold. When a new question's similarity with a cached question exceeds this threshold, the cached answer will be returned directly without calling the LLM.

- `use_llm_check`: Boolean value to enable/disable LLM similarity verification. When enabled, LLM will be used as a secondary check to verify the similarity between questions before returning cached answers. | Default: `{"enabled": False, "similarity_threshold": 0.95, "use_llm_check": False}` |

|**log\_dir** | `str` | Directory to store logs. | `./` |
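The following minimal sketch models the `similarity_threshold` check in `embedding_cache_config`. It assumes cosine similarity between question embeddings (the metric is an assumption) and ignores `use_llm_check`. `lookup` and the two-dimensional vectors are hypothetical, chosen only to keep the arithmetic visible.

```python
import math
from typing import List, Optional, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def lookup(cache: List[Tuple[List[float], str]], query_vec: List[float],
           similarity_threshold: float = 0.95) -> Optional[str]:
    """Return the answer cached for the most similar question if that similarity
    exceeds the threshold; otherwise None (meaning: call the LLM)."""
    best_score = -1.0
    best_answer: Optional[str] = None
    for vec, answer in cache:
        score = cosine(vec, query_vec)
        if score > best_score:
            best_score, best_answer = score, answer
    return best_answer if best_score > similarity_threshold else None


cache = [([1.0, 0.0], "cached answer A"), ([0.0, 1.0], "cached answer B")]
print(lookup(cache, [0.99, 0.05]))  # cached answer A (similarity ~0.999)
print(lookup(cache, [0.7, 0.7]))    # None (similarity ~0.707 with both)
```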

+

+

### Error Handling

diff --git a/contributor-README.md b/contributor-README.md

deleted file mode 100644

index 2168d4699..000000000

--- a/contributor-README.md

+++ /dev/null

@@ -1,12 +0,0 @@

-# Handy Tips for Developers Who Want to Contribute to the Project

-## Pre-commit Hooks

-Please ensure you have run pre-commit hooks before committing your changes.

-### Guides

-1. **Installing Pre-commit Hooks**:

- - Install pre-commit using pip: `pip install pre-commit`

- - Initialize pre-commit in your repository: `pre-commit install`

- - Run pre-commit hooks: `pre-commit run --all-files`

-

-2. **Pre-commit Hooks Configuration**:

- - Create a `.pre-commit-config.yaml` file in the root of your repository.

- - Add your hooks to the `.pre-commit-config.yaml`file.
